Getting Started with Kubernetes 1.4 using Spring Boot and Couchbase explains how to get started with Kubernetes 1.4 on Amazon Web Services. In that post, a Couchbase service is created in the cluster and a Spring Boot application stores a JSON document in the database. It uses the kube-up.sh script from the Kubernetes binary download at github.com/kubernetes/kubernetes/releases/download/v1.4.0/kubernetes.tar.gz to start the cluster. That script can only create a Kubernetes cluster with a single master. This is a fundamental flaw for a distributed application, because the master becomes a single point of failure.

Meet kops – short for Kubernetes Operations.

This is the easiest way to get a highly-available Kubernetes cluster up and running. kubectl is the CLI for running commands against running clusters. Think of kops as kubectl for the cluster itself.

This blog will show how to create a highly-available Kubernetes cluster on Amazon using kops. Once the cluster is created, it will create a Couchbase service on it and run a Spring Boot application to store a JSON document in the database.

Many thanks to justinsb, sarahz, razic, jaygorrell, shrugs, bkpandey and others on the Kubernetes Slack channel for helping me through the details!

Download kops and kubectl

- Download Kops latest release. This blog was tested with 1.4.1 on OSX. Complete set of commands for kops can be seen:

      kops-darwin-amd64 --help
      kops is kubernetes ops.
      It allows you to create, destroy, upgrade and maintain clusters.

      Usage:
        kops [command]

      Available Commands:
        create         create resources
        delete         delete clusters
        describe       describe objects
        edit           edit items
        export         export clusters/kubecfg
        get            list or get objects
        import         import clusters
        rolling-update rolling update clusters
        secrets        Manage secrets & keys
        toolbox        Misc infrequently used commands
        update         update clusters
        upgrade        upgrade clusters
        version        Print the client version information

      Flags:
            --alsologtostderr                  log to standard error as well as files
            --config string                    config file (default is $HOME/.kops.yaml)
            --log_backtrace_at traceLocation   when logging hits line file:N, emit a stack trace (default :0)
            --log_dir string                   If non-empty, write log files in this directory
            --logtostderr                      log to standard error instead of files (default false)
            --name string                      Name of cluster
            --state string                     Location of state storage
            --stderrthreshold severity         logs at or above this threshold go to stderr (default 2)
        -v, --v Level                          log level for V logs
            --vmodule moduleSpec               comma-separated list of pattern=N settings for file-filtered logging

      Use "kops [command] --help" for more information about a command.

- Download kubectl:

      curl -Lo kubectl http://storage.googleapis.com/kubernetes-release/release/v1.4.1/bin/darwin/amd64/kubectl && chmod +x kubectl

- Include kubectl in your PATH.

Create Bucket and NS Records on Amazon

There is a bit of setup involved at this time, and hopefully this will get cleaned up over the next releases. Bringing up a cluster on AWS provides detailed steps and more background. Here is what this blog followed:

- Pick a domain where Kubernetes cluster will be hosted. This blog uses

kubernetes.arungupta.medomain. You can pick a top level domain or a sub-domain. - Amazon Route 53 is a highly available and scalable DNS service. Login to Amazon Console and created a hosted zone for this domain using Route 53 service.

Created zone looks like:

Created zone looks like: The values shown in the

The values shown in the Valuecolumn are important as they’ll be used later for creating NS records. - Create a S3 bucket using Amazon Console to store cluster configuration – this is called

state store.

- The domain

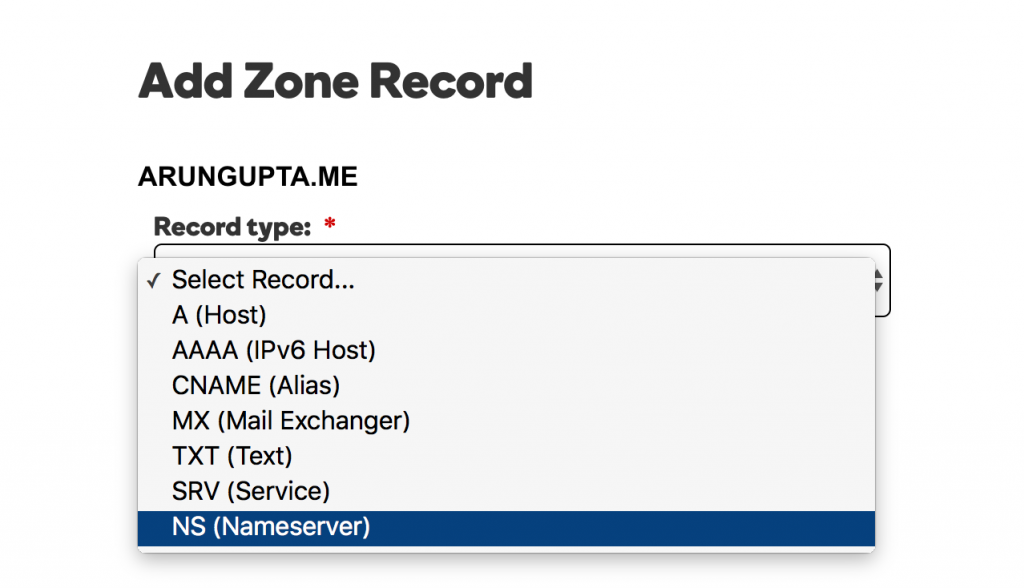

kubernetes.arungupta.meis hosted on GoDaddy. For each value shown in the Value column of Route53 hosted zone, create a NS record using GoDaddy Domain Control Center for this domain.Select the type of record:

For each value, add the record as shown:

For each value, add the record as shown: Completed set of records look like:

Completed set of records look like:

Start Kubernetes Multimaster Cluster

Let’s understand a bit about Amazon regions and zones:

Amazon EC2 is hosted in multiple locations world-wide. These locations are composed of regions and Availability Zones. Each region is a separate geographic area. Each region has multiple, isolated locations known as Availability Zones.

A highly-available Kubernetes cluster can be created across zones but not across regions.
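The zones-but-not-regions rule can be checked before any cluster is created. The following is a minimal sketch, not part of kops: the helper names `region_of` and `single_region` are hypothetical, and it assumes the usual AWS naming convention in which a zone name is the region name followed by a letter suffix (for example `us-west-2a` in `us-west-2`).

```python
import string


def region_of(zone):
    """Derive the region from a zone name by dropping the trailing letter suffix.

    Assumes the '<region><letter>' convention, e.g. 'us-west-2a' -> 'us-west-2'.
    """
    return zone.rstrip(string.ascii_lowercase)


def single_region(zones):
    """Return the one region shared by all zones, or raise if they span regions."""
    regions = {region_of(zone) for zone in zones}
    if len(regions) != 1:
        raise ValueError("zones must belong to exactly one region, got: %s"
                         % sorted(regions))
    return regions.pop()


if __name__ == "__main__":
    # The three zones used later in this blog all live in us-west-2.
    print(single_region(["us-west-2a", "us-west-2b", "us-west-2c"]))
```

Calling `single_region` with zones from two regions, such as `us-west-2a` and `us-east-1a`, raises a `ValueError` instead of returning a region.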

- Find out the availability zones within a region:

      aws ec2 describe-availability-zones --region us-west-2
      {
          "AvailabilityZones": [
              {
                  "State": "available",
                  "RegionName": "us-west-2",
                  "Messages": [],
                  "ZoneName": "us-west-2a"
              },
              {
                  "State": "available",
                  "RegionName": "us-west-2",
                  "Messages": [],
                  "ZoneName": "us-west-2b"
              },
              {
                  "State": "available",
                  "RegionName": "us-west-2",
                  "Messages": [],
                  "ZoneName": "us-west-2c"
              }
          ]
      }

- Create a multi-master cluster:

      kops-darwin-amd64 create cluster --name=kubernetes.arungupta.me --cloud=aws --zones=us-west-2a,us-west-2b,us-west-2c --master-size=m4.large --node-count=3 --node-size=m4.2xlarge --master-zones=us-west-2a,us-west-2b,us-west-2c --state=s3://kops-couchbase --yes

  Most of the switches are self-explanatory. Some switches need a bit of explanation:

  - Specifying multiple zones using --master-zones (must be an odd number) creates multiple masters across AZs
  - --cloud=aws is optional if the cloud can be inferred from the zones
  - --yes is used to specify the immediate creation of the cluster. Otherwise only the state is stored in the bucket, and the cluster needs to be created separately.

  The complete set of CLI switches can be seen:

      ./kops-darwin-amd64 create cluster --help
      Creates a k8s cluster.

      Usage:
        kops create cluster [flags]

      Flags:
            --admin-access string          Restrict access to admin endpoints (SSH, HTTPS) to this CIDR. If not set, access will not be restricted by IP.
            --associate-public-ip          Specify --associate-public-ip=[true|false] to enable/disable association of public IP for master ASG and nodes. Default is 'true'. (default true)
            --channel string               Channel for default versions and configuration to use (default "stable")
            --cloud string                 Cloud provider to use - gce, aws
            --dns-zone string              DNS hosted zone to use (defaults to last two components of cluster name)
            --image string                 Image to use
            --kubernetes-version string    Version of kubernetes to run (defaults to version in channel)
            --master-size string           Set instance size for masters
            --master-zones string          Zones in which to run masters (must be an odd number)
            --model string                 Models to apply (separate multiple models with commas) (default "config,proto,cloudup")
            --network-cidr string          Set to override the default network CIDR
            --networking string            Networking mode to use. kubenet (default), classic, external. (default "kubenet")
            --node-count int               Set the number of nodes
            --node-size string             Set instance size for nodes
            --out string                   Path to write any local output
            --project string               Project to use (must be set on GCE)
            --ssh-public-key string        SSH public key to use (default "~/.ssh/id_rsa.pub")
            --target string                Target - direct, terraform (default "direct")
            --vpc string                   Set to use a shared VPC
            --yes                          Specify --yes to immediately create the cluster
            --zones string                 Zones in which to run the cluster

      Global Flags:
            --alsologtostderr                  log to standard error as well as files
            --config string                    config file (default is $HOME/.kops.yaml)
            --log_backtrace_at traceLocation   when logging hits line file:N, emit a stack trace (default :0)
            --log_dir string                   If non-empty, write log files in this directory
            --logtostderr                      log to standard error instead of files (default false)
            --name string                      Name of cluster
            --state string                     Location of state storage
            --stderrthreshold severity         logs at or above this threshold go to stderr (default 2)
        -v, --v Level                          log level for V logs
            --vmodule moduleSpec               comma-separated list of pattern=N settings for file-filtered logging

- Once the cluster is created, get more details about the cluster:

      kubectl cluster-info
      Kubernetes master is running at https://api.kubernetes.arungupta.me
      KubeDNS is running at https://api.kubernetes.arungupta.me/api/v1/proxy/namespaces/kube-system/services/kube-dns

      To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

- Check cluster client and server version:

      kubectl version
      Client Version: version.Info{Major:"1", Minor:"4", GitVersion:"v1.4.1", GitCommit:"33cf7b9acbb2cb7c9c72a10d6636321fb180b159", GitTreeState:"clean", BuildDate:"2016-10-10T18:19:49Z", GoVersion:"go1.7.1", Compiler:"gc", Platform:"darwin/amd64"}
      Server Version: version.Info{Major:"1", Minor:"4", GitVersion:"v1.4.3", GitCommit:"4957b090e9a4f6a68b4a40375408fdc74a212260", GitTreeState:"clean", BuildDate:"2016-10-16T06:20:04Z", GoVersion:"go1.6.3", Compiler:"gc", Platform:"linux/amd64"}

- Check all nodes in the cluster:

      kubectl get nodes
      NAME                                           STATUS    AGE
      ip-172-20-111-151.us-west-2.compute.internal   Ready     1h
      ip-172-20-116-40.us-west-2.compute.internal    Ready     1h
      ip-172-20-48-41.us-west-2.compute.internal     Ready     1h
      ip-172-20-49-105.us-west-2.compute.internal    Ready     1h
      ip-172-20-80-233.us-west-2.compute.internal    Ready     1h
      ip-172-20-82-93.us-west-2.compute.internal     Ready     1h

Or find out only the master nodes:

      kubectl get nodes -l kubernetes.io/role=master
      NAME                                           STATUS    AGE
      ip-172-20-111-151.us-west-2.compute.internal   Ready     1h
      ip-172-20-48-41.us-west-2.compute.internal     Ready     1h
      ip-172-20-82-93.us-west-2.compute.internal     Ready     1h

- Check all the clusters:

      kops-darwin-amd64 get clusters --state=s3://kops-couchbase
      NAME                      CLOUD   ZONES
      kubernetes.arungupta.me   aws     us-west-2a,us-west-2b,us-west-2c
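The odd-number rule for --master-zones is easier to see with a little arithmetic. As background that is not stated in this blog: the masters run etcd, which needs a majority of its members to stay available, so an even number of masters tolerates no more failures than the odd number just below it. The sketch below assembles the same `create cluster` command line used above, using only switches shown in this blog; the function names are hypothetical, and the odd-number check simply mirrors the help text rather than reproducing kops' own validation.

```python
def tolerated_master_failures(master_count):
    """Number of masters that can be lost while a majority is still running."""
    return (master_count - 1) // 2


def create_cluster_args(name, zones, master_zones, state,
                        master_size="m4.large", node_size="m4.2xlarge",
                        node_count=3, yes=False):
    """Build the argument list for 'kops create cluster' (illustrative only)."""
    if len(master_zones) % 2 == 0:
        raise ValueError("--master-zones must list an odd number of zones")
    args = [
        "kops-darwin-amd64", "create", "cluster",
        "--name=" + name,
        "--cloud=aws",
        "--zones=" + ",".join(zones),
        "--master-size=" + master_size,
        "--node-count=%d" % node_count,
        "--node-size=" + node_size,
        "--master-zones=" + ",".join(master_zones),
        "--state=" + state,
    ]
    if yes:
        # Without --yes only the state is stored; the cluster is created later.
        args.append("--yes")
    return args


if __name__ == "__main__":
    zones = ["us-west-2a", "us-west-2b", "us-west-2c"]
    print(" ".join(create_cluster_args(
        "kubernetes.arungupta.me", zones, zones, "s3://kops-couchbase", yes=True)))
    # Three and four masters tolerate the same number of failures.
    print(tolerated_master_failures(3), tolerated_master_failures(4))
```

The returned list can be handed to `subprocess.run` once the command looks right. Keeping it as a list avoids shell quoting issues.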

Kubernetes Dashboard Addon

By default, a cluster created using kops does not have the UI dashboard. It can be added as an add-on:

    kubectl create -f https://raw.githubusercontent.com/kubernetes/kops/master/addons/kubernetes-dashboard/v1.4.0.yaml
    deployment "kubernetes-dashboard-v1.4.0" created
    service "kubernetes-dashboard" created

Now complete details about the cluster can be seen:

    kubectl cluster-info
    Kubernetes master is running at https://api.kubernetes.arungupta.me
    KubeDNS is running at https://api.kubernetes.arungupta.me/api/v1/proxy/namespaces/kube-system/services/kube-dns
    kubernetes-dashboard is running at https://api.kubernetes.arungupta.me/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard

    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

The Kubernetes UI dashboard is available at the URL shown. In our case, this is https://api.kubernetes.arungupta.me/ui and looks like:

Credentials for accessing this dashboard can be obtained using the kubectl config view command. The values are shown like:

    - name: kubernetes.arungupta.me-basic-auth
      user:
        password: PASSWORD
        username: admin
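Picking these values out by eye gets tedious when the kubeconfig holds several clusters. Here is a minimal sketch of extracting them programmatically, assuming the configuration has been exported as JSON (for example with `kubectl config view -o json`) and that the user entry follows the `<cluster name>-basic-auth` naming shown above. The function name and the sample data are hypothetical.

```python
import json


def basic_auth_credentials(kubeconfig_json, cluster_name):
    """Return (username, password) from the '<cluster_name>-basic-auth' user entry."""
    config = json.loads(kubeconfig_json)
    wanted = cluster_name + "-basic-auth"
    for entry in config.get("users") or []:
        if entry.get("name") == wanted:
            user = entry.get("user") or {}
            return user.get("username"), user.get("password")
    raise KeyError("no user entry named " + wanted)


# Hypothetical sample mirroring the structure shown above.
SAMPLE = json.dumps({
    "users": [
        {"name": "kubernetes.arungupta.me", "user": {}},
        {"name": "kubernetes.arungupta.me-basic-auth",
         "user": {"username": "admin", "password": "PASSWORD"}},
    ]
})

if __name__ == "__main__":
    print(basic_auth_credentials(SAMPLE, "kubernetes.arungupta.me"))
```

Depending on the kubectl version, secret values may be redacted in the default output, so treat this as a starting point rather than a guaranteed recipe.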

Deploy Couchbase Service

As explained in Getting Started with Kubernetes 1.4 using Spring Boot and Couchbase, let’s run a Couchbase service:

    kubectl create -f ~/workspaces/kubernetes-java-sample/maven/couchbase-service.yml
    service "couchbase-service" created
    replicationcontroller "couchbase-rc" created

This configuration file is at github.com/arun-gupta/kubernetes-java-sample/blob/master/maven/couchbase-service.yml.

Get the list of services:

    kubectl get svc
    NAME                CLUSTER-IP     EXTERNAL-IP   PORT(S)                                AGE
    couchbase-service   100.65.4.139   <none>        8091/TCP,8092/TCP,8093/TCP,11210/TCP   27s
    kubernetes          100.64.0.1     <none>        443/TCP                                2h

Describe the service:

    kubectl describe svc/couchbase-service
    Name:              couchbase-service
    Namespace:         default
    Labels:            <none>
    Selector:          app=couchbase-rc-pod
    Type:              ClusterIP
    IP:                100.65.4.139
    Port:              admin 8091/TCP
    Endpoints:         100.96.5.2:8091
    Port:              views 8092/TCP
    Endpoints:         100.96.5.2:8092
    Port:              query 8093/TCP
    Endpoints:         100.96.5.2:8093
    Port:              memcached 11210/TCP
    Endpoints:         100.96.5.2:11210
    Session Affinity:  None

Get the pods:

    kubectl get pods
    NAME                 READY     STATUS    RESTARTS   AGE
    couchbase-rc-e35v5   1/1       Running   0          1m

Run Spring Boot Application

The Spring Boot application runs against the Couchbase service and stores a JSON document in it.

Start the Spring Boot application:

    kubectl create -f ~/workspaces/kubernetes-java-sample/maven/bootiful-couchbase.yml
    job "bootiful-couchbase" created

This configuration file is at github.com/arun-gupta/kubernetes-java-sample/blob/master/maven/bootiful-couchbase.yml.

See the list of all the pods:

    kubectl get pods --show-all
    NAME                       READY     STATUS      RESTARTS   AGE
    bootiful-couchbase-ainv8   0/1       Completed   0          1m
    couchbase-rc-e35v5         1/1       Running     0          3m

Check the logs of the completed pod:

    kubectl logs bootiful-couchbase-ainv8

      .   ____          _            __ _ _
     /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
    ( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
     \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
      '  |____| .__|_| |_|_| |_\__, | / / / /
     =========|_|==============|___/=/_/_/_/
     :: Spring Boot ::        (v1.4.0.RELEASE)

    2016-11-02 18:48:56.035 INFO 7 --- [ main] org.example.webapp.Application : Starting Application v1.0-SNAPSHOT on bootiful-couchbase-ainv8 with PID 7 (/maven/bootiful-couchbase.jar started by root in /)
    2016-11-02 18:48:56.040 INFO 7 --- [ main] org.example.webapp.Application : No active profile set, falling back to default profiles: default
    2016-11-02 18:48:56.115 INFO 7 --- [ main] s.c.a.AnnotationConfigApplicationContext : Refreshing org.springframework.context.annotation.AnnotationConfigApplicationContext@108c4c35: startup date [Wed Nov 02 18:48:56 UTC 2016]; root of context hierarchy
    2016-11-02 18:48:57.021 INFO 7 --- [ main] com.couchbase.client.core.CouchbaseCore : CouchbaseEnvironment: {sslEnabled=false, sslKeystoreFile='null', sslKeystorePassword='null', queryEnabled=false, queryPort=8093, bootstrapHttpEnabled=true, bootstrapCarrierEnabled=true, bootstrapHttpDirectPort=8091, bootstrapHttpSslPort=18091, bootstrapCarrierDirectPort=11210, bootstrapCarrierSslPort=11207, ioPoolSize=8, computationPoolSize=8, responseBufferSize=16384, requestBufferSize=16384, kvServiceEndpoints=1, viewServiceEndpoints=1, queryServiceEndpoints=1, searchServiceEndpoints=1, ioPool=NioEventLoopGroup, coreScheduler=CoreScheduler, eventBus=DefaultEventBus, packageNameAndVersion=couchbase-java-client/2.2.8 (git: 2.2.8, core: 1.2.9), dcpEnabled=false, retryStrategy=BestEffort, maxRequestLifetime=75000, retryDelay=ExponentialDelay{growBy 1.0 MICROSECONDS, powers of 2; lower=100, upper=100000}, reconnectDelay=ExponentialDelay{growBy 1.0 MILLISECONDS, powers of 2; lower=32, upper=4096}, observeIntervalDelay=ExponentialDelay{growBy 1.0 MICROSECONDS, powers of 2; lower=10, upper=100000}, keepAliveInterval=30000, autoreleaseAfter=2000, bufferPoolingEnabled=true, tcpNodelayEnabled=true, mutationTokensEnabled=false, socketConnectTimeout=1000, dcpConnectionBufferSize=20971520, dcpConnectionBufferAckThreshold=0.2, dcpConnectionName=dcp/core-io, callbacksOnIoPool=false, queryTimeout=7500, viewTimeout=7500, kvTimeout=2500, connectTimeout=5000, disconnectTimeout=25000, dnsSrvEnabled=false}
    2016-11-02 18:48:57.245 INFO 7 --- [ cb-io-1-1] com.couchbase.client.core.node.Node : Connected to Node couchbase-service
    2016-11-02 18:48:57.291 INFO 7 --- [ cb-io-1-1] com.couchbase.client.core.node.Node : Disconnected from Node couchbase-service
    2016-11-02 18:48:57.533 INFO 7 --- [ cb-io-1-2] com.couchbase.client.core.node.Node : Connected to Node couchbase-service
    2016-11-02 18:48:57.638 INFO 7 --- [-computations-4] c.c.c.core.config.ConfigurationProvider : Opened bucket books
    2016-11-02 18:48:58.152 INFO 7 --- [ main] o.s.j.e.a.AnnotationMBeanExporter : Registering beans for JMX exposure on startup
    Book{isbn=978-1-4919-1889-0, name=Minecraft Modding with Forge, cost=29.99}
    2016-11-02 18:48:58.402 INFO 7 --- [ main] org.example.webapp.Application : Started Application in 2.799 seconds (JVM running for 3.141)
    2016-11-02 18:48:58.403 INFO 7 --- [ Thread-5] s.c.a.AnnotationConfigApplicationContext : Closing org.springframework.context.annotation.AnnotationConfigApplicationContext@108c4c35: startup date [Wed Nov 02 18:48:56 UTC 2016]; root of context hierarchy
    2016-11-02 18:48:58.404 INFO 7 --- [ Thread-5] o.s.j.e.a.AnnotationMBeanExporter : Unregistering JMX-exposed beans on shutdown
    2016-11-02 18:48:58.410 INFO 7 --- [ cb-io-1-2] com.couchbase.client.core.node.Node : Disconnected from Node couchbase-service
    2016-11-02 18:48:58.410 INFO 7 --- [ Thread-5] c.c.c.core.config.ConfigurationProvider : Closed bucket books

The updated dashboard now looks like:

Delete the Kubernetes Cluster

The Kubernetes cluster can be deleted as:

    kops-darwin-amd64 delete cluster --name=kubernetes.arungupta.me --state=s3://kops-couchbase --yes
    TYPE                  NAME  ID
    autoscaling-config    master-us-west-2a.masters.kubernetes.arungupta.me-20161101235639  master-us-west-2a.masters.kubernetes.arungupta.me-20161101235639
    autoscaling-config    master-us-west-2b.masters.kubernetes.arungupta.me-20161101235639  master-us-west-2b.masters.kubernetes.arungupta.me-20161101235639
    autoscaling-config    master-us-west-2c.masters.kubernetes.arungupta.me-20161101235639  master-us-west-2c.masters.kubernetes.arungupta.me-20161101235639
    autoscaling-config    nodes.kubernetes.arungupta.me-20161101235639  nodes.kubernetes.arungupta.me-20161101235639
    autoscaling-group     master-us-west-2a.masters.kubernetes.arungupta.me  master-us-west-2a.masters.kubernetes.arungupta.me
    autoscaling-group     master-us-west-2b.masters.kubernetes.arungupta.me  master-us-west-2b.masters.kubernetes.arungupta.me
    autoscaling-group     master-us-west-2c.masters.kubernetes.arungupta.me  master-us-west-2c.masters.kubernetes.arungupta.me
    autoscaling-group     nodes.kubernetes.arungupta.me  nodes.kubernetes.arungupta.me
    dhcp-options          kubernetes.arungupta.me  dopt-9b7b08ff
    iam-instance-profile  masters.kubernetes.arungupta.me  masters.kubernetes.arungupta.me
    iam-instance-profile  nodes.kubernetes.arungupta.me  nodes.kubernetes.arungupta.me
    iam-role              masters.kubernetes.arungupta.me  masters.kubernetes.arungupta.me
    iam-role              nodes.kubernetes.arungupta.me  nodes.kubernetes.arungupta.me
    instance              master-us-west-2a.masters.kubernetes.arungupta.me  i-8798eb9f
    instance              master-us-west-2b.masters.kubernetes.arungupta.me  i-eca96ab3
    instance              master-us-west-2c.masters.kubernetes.arungupta.me  i-63fd3dbf
    instance              nodes.kubernetes.arungupta.me  i-21a96a7e
    instance              nodes.kubernetes.arungupta.me  i-57fb3b8b
    instance              nodes.kubernetes.arungupta.me  i-5c99ea44
    internet-gateway      kubernetes.arungupta.me  igw-b624abd2
    keypair               kubernetes.kubernetes.arungupta.me-18:90:41:6f:5f:79:6a:a8:d5:b6:b8:3f:10:d5:d3:f3  kubernetes.kubernetes.arungupta.me-18:90:41:6f:5f:79:6a:a8:d5:b6:b8:3f:10:d5:d3:f3
    route-table           kubernetes.arungupta.me  rtb-e44df183
    route53-record        api.internal.kubernetes.arungupta.me.  Z6I41VJM5VCZV/api.internal.kubernetes.arungupta.me.
    route53-record        api.kubernetes.arungupta.me.  Z6I41VJM5VCZV/api.kubernetes.arungupta.me.
    route53-record        etcd-events-us-west-2a.internal.kubernetes.arungupta.me.  Z6I41VJM5VCZV/etcd-events-us-west-2a.internal.kubernetes.arungupta.me.
    route53-record        etcd-events-us-west-2b.internal.kubernetes.arungupta.me.  Z6I41VJM5VCZV/etcd-events-us-west-2b.internal.kubernetes.arungupta.me.
    route53-record        etcd-events-us-west-2c.internal.kubernetes.arungupta.me.  Z6I41VJM5VCZV/etcd-events-us-west-2c.internal.kubernetes.arungupta.me.
    route53-record        etcd-us-west-2a.internal.kubernetes.arungupta.me.  Z6I41VJM5VCZV/etcd-us-west-2a.internal.kubernetes.arungupta.me.
    route53-record        etcd-us-west-2b.internal.kubernetes.arungupta.me.  Z6I41VJM5VCZV/etcd-us-west-2b.internal.kubernetes.arungupta.me.
    route53-record        etcd-us-west-2c.internal.kubernetes.arungupta.me.  Z6I41VJM5VCZV/etcd-us-west-2c.internal.kubernetes.arungupta.me.
    security-group        masters.kubernetes.arungupta.me  sg-3e790f47
    security-group        nodes.kubernetes.arungupta.me  sg-3f790f46
    subnet                us-west-2a.kubernetes.arungupta.me  subnet-3cdbc958
    subnet                us-west-2b.kubernetes.arungupta.me  subnet-18c3f76e
    subnet                us-west-2c.kubernetes.arungupta.me  subnet-b30f6deb
    volume                us-west-2a.etcd-events.kubernetes.arungupta.me  vol-202350a8
    volume                us-west-2a.etcd-main.kubernetes.arungupta.me  vol-0a235082
    volume                us-west-2b.etcd-events.kubernetes.arungupta.me  vol-401f5bf4
    volume                us-west-2b.etcd-main.kubernetes.arungupta.me  vol-691f5bdd
    volume                us-west-2c.etcd-events.kubernetes.arungupta.me  vol-aefe163b
    volume                us-west-2c.etcd-main.kubernetes.arungupta.me  vol-e9fd157c
    vpc                   kubernetes.arungupta.me  vpc-e5f50382

    internet-gateway:igw-b624abd2 still has dependencies, will retry
    keypair:kubernetes.kubernetes.arungupta.me-18:90:41:6f:5f:79:6a:a8:d5:b6:b8:3f:10:d5:d3:f3 ok
    instance:i-5c99ea44 ok
    instance:i-63fd3dbf ok
    instance:i-eca96ab3 ok
    instance:i-21a96a7e ok
    autoscaling-group:master-us-west-2a.masters.kubernetes.arungupta.me ok
    autoscaling-group:master-us-west-2b.masters.kubernetes.arungupta.me ok
    autoscaling-group:master-us-west-2c.masters.kubernetes.arungupta.me ok
    autoscaling-group:nodes.kubernetes.arungupta.me ok
    iam-instance-profile:nodes.kubernetes.arungupta.me ok
    iam-instance-profile:masters.kubernetes.arungupta.me ok
    instance:i-57fb3b8b ok
    instance:i-8798eb9f ok
    route53-record:Z6I41VJM5VCZV/etcd-events-us-west-2a.internal.kubernetes.arungupta.me. ok
    iam-role:nodes.kubernetes.arungupta.me ok
    iam-role:masters.kubernetes.arungupta.me ok
    autoscaling-config:nodes.kubernetes.arungupta.me-20161101235639 ok
    autoscaling-config:master-us-west-2b.masters.kubernetes.arungupta.me-20161101235639 ok
    subnet:subnet-b30f6deb still has dependencies, will retry
    subnet:subnet-3cdbc958 still has dependencies, will retry
    subnet:subnet-18c3f76e still has dependencies, will retry
    autoscaling-config:master-us-west-2a.masters.kubernetes.arungupta.me-20161101235639 ok
    autoscaling-config:master-us-west-2c.masters.kubernetes.arungupta.me-20161101235639 ok
    volume:vol-0a235082 still has dependencies, will retry
    volume:vol-202350a8 still has dependencies, will retry
    volume:vol-401f5bf4 still has dependencies, will retry
    volume:vol-e9fd157c still has dependencies, will retry
    volume:vol-aefe163b still has dependencies, will retry
    volume:vol-691f5bdd still has dependencies, will retry
    security-group:sg-3f790f46 still has dependencies, will retry
    security-group:sg-3e790f47 still has dependencies, will retry
    Not all resources deleted; waiting before reattempting deletion
    internet-gateway:igw-b624abd2
    security-group:sg-3f790f46
    volume:vol-aefe163b
    route-table:rtb-e44df183
    volume:vol-401f5bf4
    subnet:subnet-18c3f76e
    security-group:sg-3e790f47
    volume:vol-691f5bdd
    subnet:subnet-3cdbc958
    volume:vol-202350a8
    volume:vol-0a235082
    dhcp-options:dopt-9b7b08ff
    subnet:subnet-b30f6deb
    volume:vol-e9fd157c
    vpc:vpc-e5f50382
    internet-gateway:igw-b624abd2 still has dependencies, will retry
    volume:vol-e9fd157c still has dependencies, will retry
    subnet:subnet-3cdbc958 still has dependencies, will retry
    subnet:subnet-18c3f76e still has dependencies, will retry
    subnet:subnet-b30f6deb still has dependencies, will retry
    volume:vol-0a235082 still has dependencies, will retry
    volume:vol-aefe163b still has dependencies, will retry
    volume:vol-691f5bdd still has dependencies, will retry
    volume:vol-202350a8 still has dependencies, will retry
    volume:vol-401f5bf4 still has dependencies, will retry
    security-group:sg-3f790f46 still has dependencies, will retry
    security-group:sg-3e790f47 still has dependencies, will retry
    Not all resources deleted; waiting before reattempting deletion
    subnet:subnet-b30f6deb
    volume:vol-e9fd157c
    vpc:vpc-e5f50382
    internet-gateway:igw-b624abd2
    security-group:sg-3f790f46
    volume:vol-aefe163b
    route-table:rtb-e44df183
    volume:vol-401f5bf4
    subnet:subnet-18c3f76e
    security-group:sg-3e790f47
    volume:vol-691f5bdd
    subnet:subnet-3cdbc958
    volume:vol-202350a8
    volume:vol-0a235082
    dhcp-options:dopt-9b7b08ff
    subnet:subnet-18c3f76e still has dependencies, will retry
    subnet:subnet-b30f6deb still has dependencies, will retry
    internet-gateway:igw-b624abd2 still has dependencies, will retry
    subnet:subnet-3cdbc958 still has dependencies, will retry
    volume:vol-691f5bdd still has dependencies, will retry
    volume:vol-0a235082 still has dependencies, will retry
    volume:vol-202350a8 still has dependencies, will retry
    volume:vol-401f5bf4 still has dependencies, will retry
    volume:vol-aefe163b still has dependencies, will retry
    volume:vol-e9fd157c still has dependencies, will retry
    security-group:sg-3e790f47 still has dependencies, will retry
    security-group:sg-3f790f46 still has dependencies, will retry
    Not all resources deleted; waiting before reattempting deletion
    internet-gateway:igw-b624abd2
    security-group:sg-3f790f46
    volume:vol-aefe163b
    route-table:rtb-e44df183
    volume:vol-401f5bf4
    subnet:subnet-18c3f76e
    security-group:sg-3e790f47
    volume:vol-691f5bdd
    subnet:subnet-3cdbc958
    volume:vol-202350a8
    volume:vol-0a235082
    dhcp-options:dopt-9b7b08ff
    subnet:subnet-b30f6deb
    volume:vol-e9fd157c
    vpc:vpc-e5f50382
    subnet:subnet-b30f6deb still has dependencies, will retry
    volume:vol-202350a8 still has dependencies, will retry
    internet-gateway:igw-b624abd2 still has dependencies, will retry
    subnet:subnet-18c3f76e still has dependencies, will retry
    volume:vol-e9fd157c still has dependencies, will retry
    volume:vol-aefe163b still has dependencies, will retry
    volume:vol-401f5bf4 still has dependencies, will retry
    volume:vol-691f5bdd still has dependencies, will retry
    security-group:sg-3e790f47 still has dependencies, will retry
    security-group:sg-3f790f46 still has dependencies, will retry
    subnet:subnet-3cdbc958 still has dependencies, will retry
    volume:vol-0a235082 still has dependencies, will retry
    Not all resources deleted; waiting before reattempting deletion
    internet-gateway:igw-b624abd2
    security-group:sg-3f790f46
    volume:vol-aefe163b
    route-table:rtb-e44df183
    subnet:subnet-18c3f76e
    security-group:sg-3e790f47
    volume:vol-691f5bdd
    volume:vol-401f5bf4
    volume:vol-202350a8
    subnet:subnet-3cdbc958
    volume:vol-0a235082
    dhcp-options:dopt-9b7b08ff
    subnet:subnet-b30f6deb
    volume:vol-e9fd157c
    vpc:vpc-e5f50382
    subnet:subnet-18c3f76e ok
    volume:vol-e9fd157c ok
    volume:vol-401f5bf4 ok
    volume:vol-0a235082 ok
    volume:vol-691f5bdd ok
    subnet:subnet-3cdbc958 ok
    volume:vol-aefe163b ok
    subnet:subnet-b30f6deb ok
    internet-gateway:igw-b624abd2 ok
    volume:vol-202350a8 ok
    security-group:sg-3f790f46 ok
    security-group:sg-3e790f47 ok
    route-table:rtb-e44df183 ok
    vpc:vpc-e5f50382 ok
    dhcp-options:dopt-9b7b08ff ok

    Cluster deleted

couchbase.com/containers provides more details about how to run Couchbase in different container frameworks.

More information about Couchbase:

- Couchbase Developer Portal

- Couchbase Forums

- @couchbasedev or @couchbase

Source: blog.couchbase.com/2016/november/multimaster-kubernetes-cluster-amazon-kops
