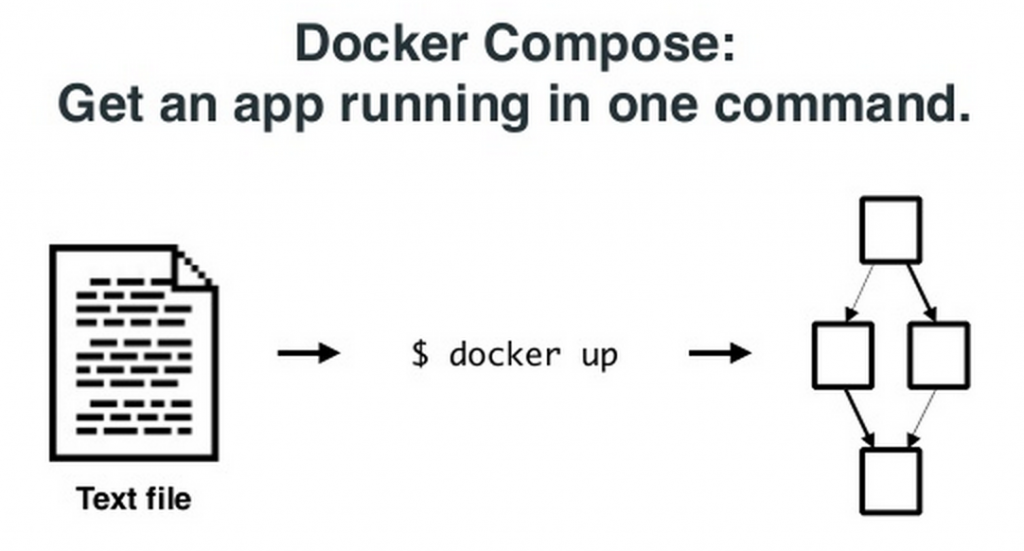

Docker 1.13 introduced a new version of the Docker Compose file format, version 3. The main feature of this release is that it allows services defined in Docker Compose files to be deployed directly to a Docker Engine with swarm mode enabled. This simplifies deploying multi-container applications across multiple hosts.

This blog uses a simple Docker Compose file to show how services are created and deployed in Docker 1.13.

Here is a Docker Compose v2 definition for starting a Couchbase database node:

```yaml
version: "2"
services:
  db:
    image: arungupta/couchbase:latest
    ports:
      - 8091:8091
      - 8092:8092
      - 8093:8093
      - 11210:11210
```

This definition can be started on a Docker Engine without Swarm mode as:

```shell
docker-compose up
```

This starts a single replica of the service defined in the Compose file. The service can be scaled as:

```shell
docker-compose scale db=2
```

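This works on a single host only as long as the ports are not published; with the `ports` mapping above, the second container fails to start because the first one already holds those host ports. If swarm mode is enabled on the Docker Engine, Compose shows this message: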

```
WARNING: The Docker Engine you're using is running in swarm mode.

Compose does not use swarm mode to deploy services to multiple nodes in a swarm. All containers will be scheduled on the current node.

To deploy your application across the swarm, use `docker stack deploy`.
```

Docker Compose gives us multi-container applications, but the applications are still restricted to a single host, which is a single point of failure.

Swarm mode allows you to create a cluster of Docker Engines. With 1.13, the `docker stack deploy` command can be used to deploy a Compose file to a cluster in swarm mode.

Here is a Docker Compose v3 definition:

```yaml
version: "3"
services:
  db:
    image: arungupta/couchbase:latest
    ports:
      - 8091:8091
      - 8092:8092
      - 8093:8093
      - 11210:11210
```

As you can see, the only change is the value of the `version` attribute. Docker Compose v3 has other changes as well; read about the different Docker Compose versions and how to upgrade from v2 to v3.

Enable swarm mode:

```shell
docker swarm init
```

Other nodes can join this swarm cluster, which makes it easy to deploy the same multi-container application across multiple hosts.
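Joining another node takes two steps: get a join token on the manager, then run `docker swarm join` on the new host. The helpers below are a minimal sketch; the function names and the `DOCKER` override (set `DOCKER=echo` to print the commands instead of running them) are hypothetical conveniences, not part of Docker, and the manager address is whatever IP your first node is reachable on.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around the swarm join commands.
# Set DOCKER=echo to print the commands instead of running them.
DOCKER="${DOCKER:-docker}"

# Run on the manager: print only the worker join token (-q).
worker_join_token() {
  "$DOCKER" swarm join-token -q worker
}

# Run on each new node: join the swarm whose manager is at the given IP.
# 2377 is the default swarm management port.
join_swarm() {
  local token="$1" manager_ip="$2"
  "$DOCKER" swarm join --token "$token" "${manager_ip}:2377"
}
```

After `join_swarm "$TOKEN" <manager-ip>` on a second host, `docker node ls` on the manager should list both nodes.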

Deploy the services defined in the Compose file as:

```shell
docker stack deploy --compose-file=docker-compose.yml couchbase
```

A default value for the Compose file would make this command a bit shorter. #30352 should take care of that.
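Until such a default exists, a small shell function can supply one. This is a hypothetical helper, not part of the Docker CLI; it assumes the Compose file is named `docker-compose.yml` unless a second argument says otherwise.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper; set DOCKER=echo to print the command instead of running it.
DOCKER="${DOCKER:-docker}"

# Usage: deploy_stack <stack-name> [compose-file]
# Falls back to docker-compose.yml when no Compose file is given.
deploy_stack() {
  local stack="${1:?stack name required}"
  local compose_file="${2:-docker-compose.yml}"
  "$DOCKER" stack deploy --compose-file="$compose_file" "$stack"
}
```

With this in place, `deploy_stack couchbase` runs the same command as above.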

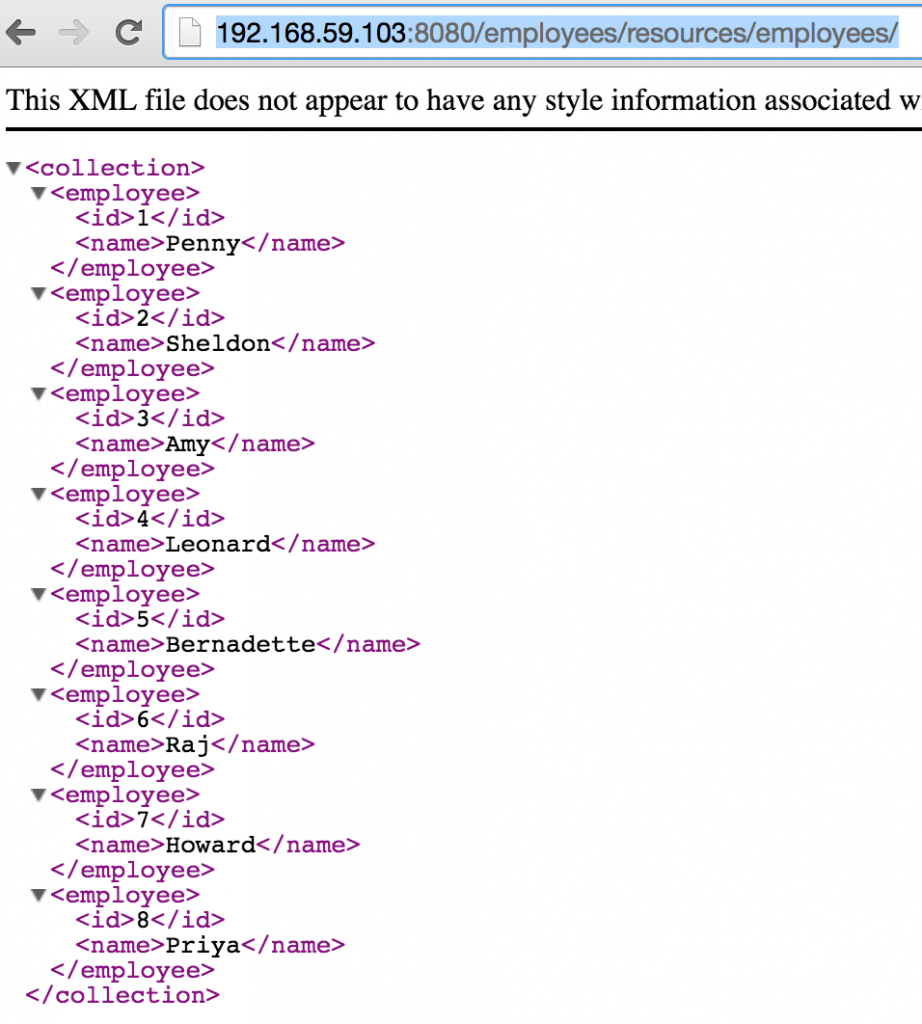

The running services can be verified using the `docker service ls` command:

```
ID            NAME          MODE        REPLICAS  IMAGE
05wa4y2he9w5  couchbase_db  replicated  1/1       arungupta/couchbase:latest
```

The containers running within the service can be listed using the `docker service ps couchbase_db` command:

```
ID            NAME            IMAGE                       NODE  DESIRED STATE  CURRENT STATE           ERROR  PORTS
rchu2uykeuuj  couchbase_db.1  arungupta/couchbase:latest  moby  Running        Running 52 seconds ago
```

In this case, a single container is running as part of the service. The node is listed as `moby`, which is the default name of the Docker Engine host in Docker for Mac.

The service can now be scaled as:

```shell
docker service scale couchbase_db=2
```

Listing the containers again shows both replicas:

```
ID            NAME            IMAGE                       NODE  DESIRED STATE  CURRENT STATE           ERROR  PORTS
rchu2uykeuuj  couchbase_db.1  arungupta/couchbase:latest  moby  Running        Running 3 minutes ago
kjy7l14weao8  couchbase_db.2  arungupta/couchbase:latest  moby  Running        Running 23 seconds ago
```

Note that the containers are named using the format `<service-name>.<n>`, and that the service name itself is `<stack-name>_<service>` (here `couchbase_db`). Both containers are running on the same host.
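With more replicas and more hosts, it helps to summarize where the containers landed. The sketch below is a hypothetical helper that reads the plain-text output of `docker service ps` on standard input and counts running tasks per node; it assumes the default column layout shown above, where NODE is the fourth column and DESIRED STATE and CURRENT STATE follow it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count running tasks per node from `docker service ps` output.
# Assumes the default layout: ID NAME IMAGE NODE DESIRED-STATE CURRENT-STATE ...
# Skips the header line; counts rows whose desired and current state are Running.
replicas_per_node() {
  awk 'NR > 1 && $5 == "Running" && $6 == "Running" { count[$4]++ }
       END { for (node in count) print node, count[node] }'
}
```

For the two-replica output above, `docker service ps couchbase_db | replicas_per_node` prints `moby 2`, confirming that both containers share one host.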

Also note that the two containers are independent Couchbase nodes and are not yet configured as a cluster. Clustering them has already been explained in Couchbase Cluster using Docker, and a refresher on the steps is coming soon.

A service will typically have multiple containers spread across multiple hosts. Docker 1.13 introduces a new command, `docker service logs <service-name>`, which streams the logs of a service from all of its containers on all hosts to your console. In our case, `docker service logs couchbase_db` shows output like:

```
couchbase_db.1.rchu2uykeuuj@moby | ++ set -m
couchbase_db.1.rchu2uykeuuj@moby | ++ sleep 15
couchbase_db.1.rchu2uykeuuj@moby | ++ /entrypoint.sh couchbase-server
couchbase_db.2.kjy7l14weao8@moby | ++ set -m
couchbase_db.2.kjy7l14weao8@moby | ++ sleep 15
couchbase_db.1.rchu2uykeuuj@moby | Starting Couchbase Server -- Web UI available at http://:8091 and logs available in /opt/couchbase/var/lib/couchbase/logs
couchbase_db.1.rchu2uykeuuj@moby | ++ curl -v -X POST http://127.0.0.1:8091/pools/default -d memoryQuota=300 -d indexMemoryQuota=300
couchbase_db.2.kjy7l14weao8@moby | ++ /entrypoint.sh couchbase-server
couchbase_db.2.kjy7l14weao8@moby | Starting Couchbase Server -- Web UI available at http://:8091 and logs available in /opt/couchbase/var/lib/couchbase/logs
. . .
couchbase_db.1.rchu2uykeuuj@moby | ++ '[' '' = WORKER ']'
couchbase_db.2.kjy7l14weao8@moby | Content-Type: application/json
couchbase_db.1.rchu2uykeuuj@moby | ++ fg 1
couchbase_db.2.kjy7l14weao8@moby | Content-Length: 152
couchbase_db.1.rchu2uykeuuj@moby | /entrypoint.sh couchbase-server
couchbase_db.2.kjy7l14weao8@moby | Cache-Control: no-cache
couchbase_db.2.kjy7l14weao8@moby |
couchbase_db.2.kjy7l14weao8@moby | ++ echo 'Type: '
couchbase_db.2.kjy7l14weao8@moby | ++ '[' '' = WORKER ']'
couchbase_db.2.kjy7l14weao8@moby | ++ fg 1
couchbase_db.2.kjy7l14weao8@moby | {"storageMode":"memory_optimized","indexerThreads":0,"memorySnapshotInterval":200,"stableSnapshotInterval":5000,"maxRollbackPoints":5,"logLevel":"info"}Type:
couchbase_db.2.kjy7l14weao8@moby | /entrypoint.sh couchbase-server
```

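Each log line starts with a preamble in the format `<task-name>.<task-id>@<node>`, where the task name (for example `couchbase_db.1`) and the task ID match the NAME and ID columns of `docker service ps`. The actual log message from the container follows the `|`.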

At first, attaching the ID may seem redundant. But Docker services are self-healing: if a container dies, Docker starts a new one to keep the specified number of replicas running. The replacement reuses the same replica number, such as `couchbase_db.1`, but gets a new ID, so the ID identifies exactly which container each log message came from.
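Because the preamble has a fixed shape, it is easy to take apart, for example to group log lines by replica or to spot output from a replacement container. This is a minimal sketch with a hypothetical function name, assuming each line looks like `couchbase_db.2.kjy7l14weao8@moby | ...` as in the logs above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split a `docker service logs` line into its parts.
# Prints: <service-name> <replica> <task-id> <node>
parse_log_preamble() {
  local line="$1"
  local preamble="${line%% |*}"   # drop " | <message>" (from the first " |")
  local node="${preamble##*@}"    # text after the last "@"
  local task="${preamble%@*}"     # <service-name>.<replica>.<task-id>
  local task_id="${task##*.}"     # after the last "."
  local name="${task%.*}"         # <service-name>.<replica>
  printf '%s %s %s %s\n' "${name%.*}" "${name##*.}" "$task_id" "$node"
}
```

Feeding `docker service logs couchbase_db` through a `while read -r line` loop that calls this function is one way to separate the output of `couchbase_db.1` from `couchbase_db.2`.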

Here is a quick comparison of the commands:

| | Docker Compose v2 | Docker Compose v3 |
|---|---|---|
| Start services | `docker-compose up -d` | `docker stack deploy --compose-file=docker-compose.yml <stack-name>` |
| Scale service | `docker-compose scale <service>=<replicas>` | `docker service scale <stack-name>_<service>=<replicas>` |
| Shutdown | `docker-compose down` | `docker stack rm <stack-name>` |
| Multi-host | No | Yes |
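The swarm-mode commands from the table can also be wrapped in a few shell functions. This is a minimal sketch with hypothetical function names, relying on the naming convention seen earlier, where service `db` deployed in stack `couchbase` becomes `couchbase_db`; set `DOCKER=echo` to print the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical wrappers for the swarm-mode commands in the table.
# Set DOCKER=echo to print the commands instead of running them.
DOCKER="${DOCKER:-docker}"

# Services in a stack are named <stack-name>_<service>.
stack_service_name() {
  printf '%s_%s\n' "$1" "$2"
}

# Usage: scale_stack_service <stack-name> <service> <replicas>
scale_stack_service() {
  "$DOCKER" service scale "$(stack_service_name "$1" "$2")=$3"
}

# Usage: remove_stack <stack-name>  (the counterpart of docker-compose down)
remove_stack() {
  "$DOCKER" stack rm "$1"
}
```

For example, `scale_stack_service couchbase db 2` issues the same `docker service scale couchbase_db=2` used earlier.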

Want to get started with Couchbase? Look at Couchbase Starter Kits.

Want to learn more about running Couchbase in containers?

- Couchbase on Containers

- Couchbase Forums

- Couchbase Developer Portal

- @couchbasedev and @couchbase

Source: https://blog.couchbase.com/2017/deploy-docker-compose-services-swarm