Java EE applications are typically built and packaged using Maven. For example, github.com/javaee-samples/javaee7-docker-maven is a trivial Java EE 7 application whose pom.xml declares the Java EE 7 dependency:

```xml
<dependencies>
    <dependency>
        <groupId>javax</groupId>
        <artifactId>javaee-api</artifactId>
        <version>7.0</version>
        <scope>provided</scope>
    </dependency>
</dependencies>
```

It also configures the two Maven plugins that compile the source and build the WAR file:

```xml
<plugin>
    <artifactId>maven-compiler-plugin</artifactId>
    <version>3.1</version>
    <configuration>
        <source>1.7</source>
        <target>1.7</target>
    </configuration>
</plugin>
<plugin>
    <artifactId>maven-war-plugin</artifactId>
    <version>2.3</version>
    <configuration>
        <failOnMissingWebXml>false</failOnMissingWebXml>
    </configuration>
</plugin>
```

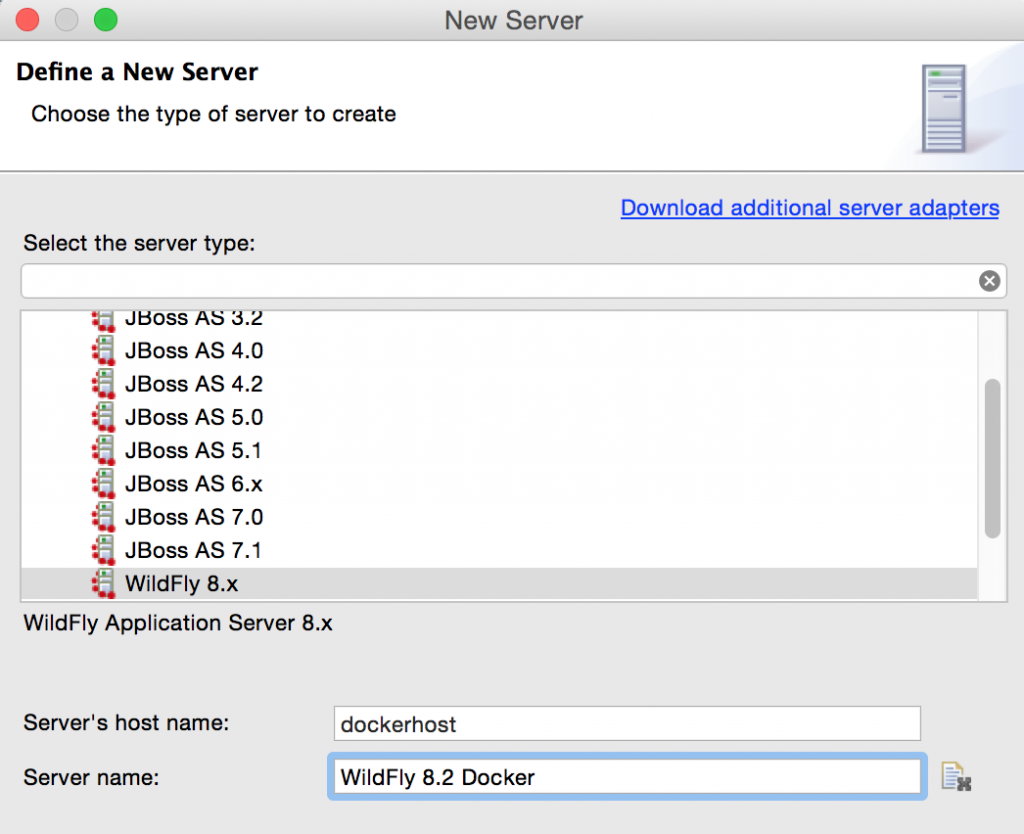

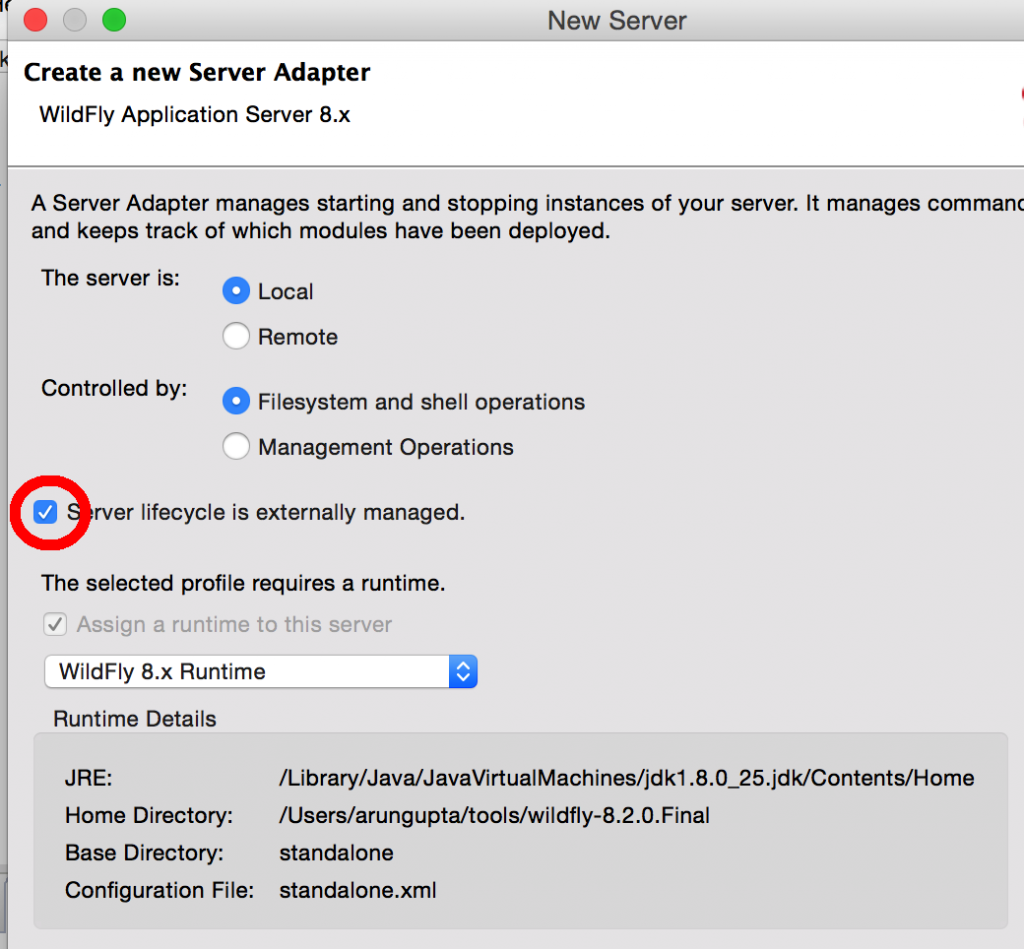

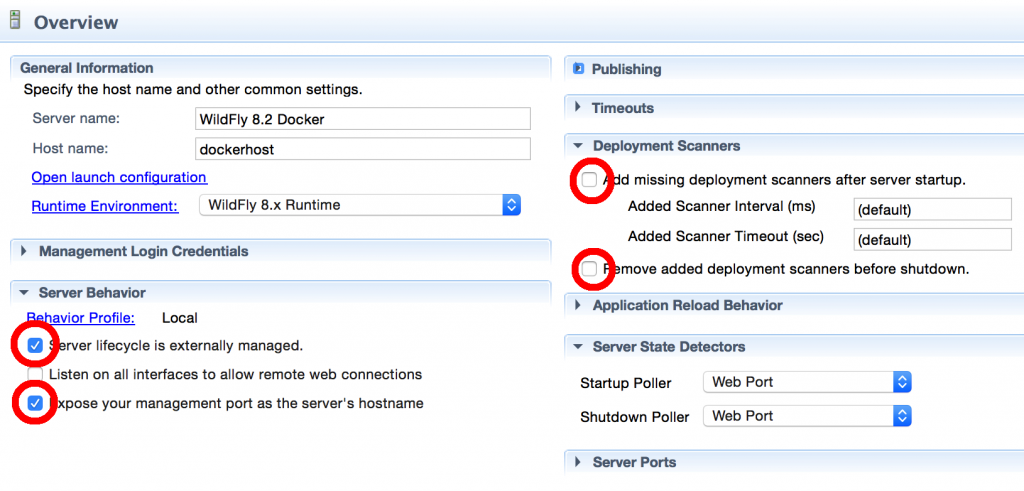

This application can then be deployed to a Java EE 7 container, such as WildFly, using the wildfly-maven-plugin:

```xml
<plugin>
    <groupId>org.wildfly.plugins</groupId>
    <artifactId>wildfly-maven-plugin</artifactId>
    <version>1.0.2.Final</version>
</plugin>
```

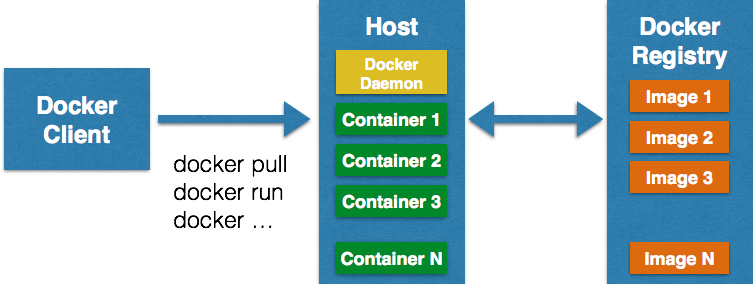

Tests can be run with Arquillian, again through Maven. So if you package this application as a Docker image and run it inside a Docker container, there should be a way to integrate that seamlessly into the Maven workflow.

Docker Maven Plugin

Meet docker-maven-plugin!

This plugin allows you to manage Docker images and containers from your pom.xml. It comes with predefined goals:

| Goal | Description |
|---|---|
| docker:start | Create and start containers |
| docker:stop | Stop and destroy containers |
| docker:build | Build images |
| docker:push | Push images to a registry |
| docker:remove | Remove images from the local Docker host |
| docker:logs | Show container logs |

The plugin's Introduction gives a high-level overview, covering building images, running containers and configuration.

Run Java EE 7 Application as Docker Container using Maven

TL;DR

- Create and configure a Docker Machine as explained in Docker Machine to Setup Docker Host.
- Clone the workspace:

  ```shell
  git clone https://github.com/javaee-samples/javaee7-docker-maven.git
  ```

- Build the Docker image:

  ```shell
  mvn package -Pdocker
  ```

- Run the Docker container:

  ```shell
  mvn install -Pdocker
  ```

- Find out the IP address of the Docker Machine:

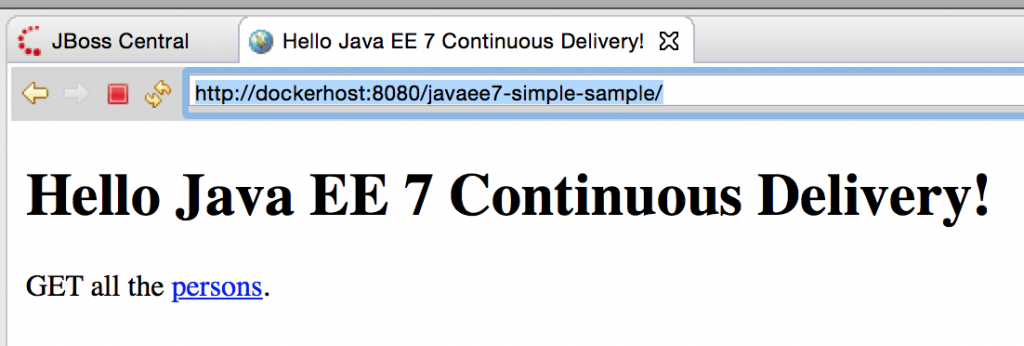

  ```shell
  docker-machine ip mydocker
  ```

- Access your application, using the IP address returned by the previous command:

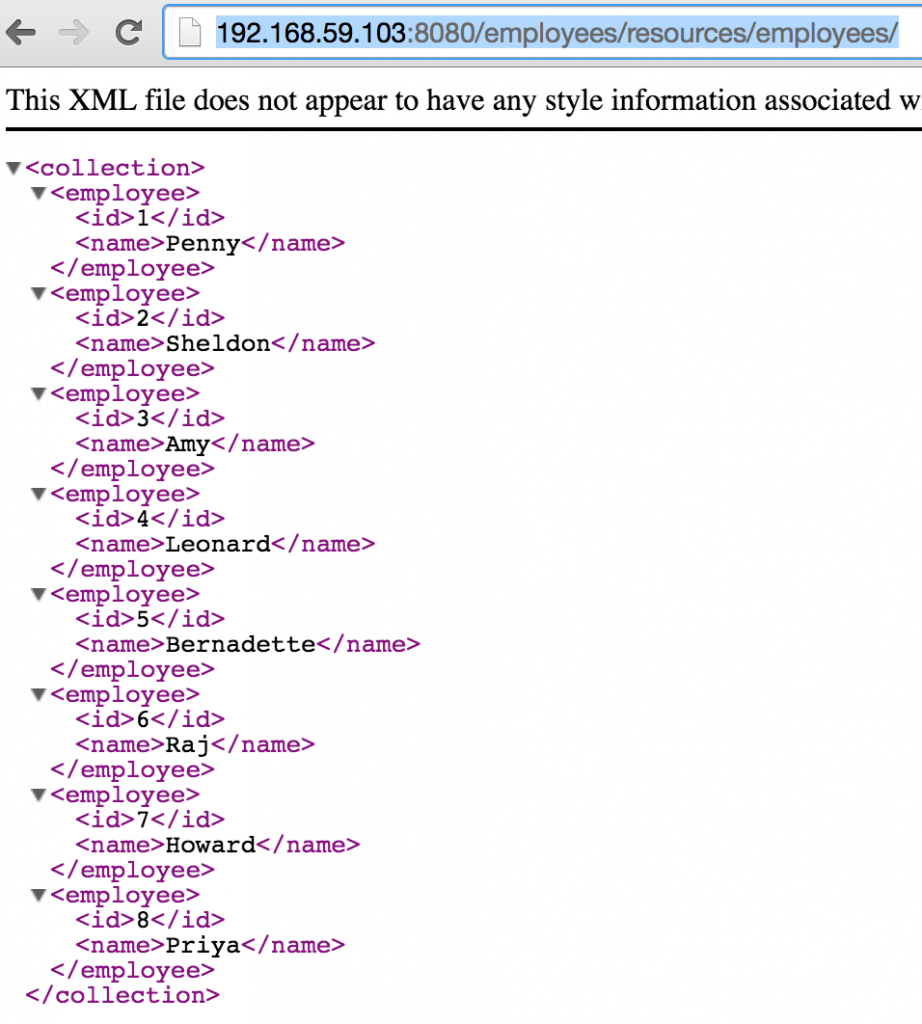

  ```
  javaee7-docker-maven> curl http://192.168.99.100:8080/javaee7-docker-maven/resources/persons
  <?xml version="1.0" encoding="UTF-8" standalone="yes"?><collection><person><name>Penny</name></person><person><name>Leonard</name></person><person><name>Sheldon</name></person><person><name>Amy</name></person><person><name>Howard</name></person><person><name>Bernadette</name></person><person><name>Raj</name></person><person><name>Priya</name></person></collection>
  ```
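The response is plain XML, so a Java client could extract the names with the JDK's built-in DOM parser instead of reading the raw curl output. The following is a minimal sketch, not part of the sample project: the class name `PersonNames` is hypothetical, and it assumes the response keeps the `collection`/`person`/`name` shape shown above.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;

// Hypothetical helper: not part of javaee7-docker-maven.
public class PersonNames {

    // Returns the text of every <name> element, in document order.
    public static List<String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            NodeList nodes = doc.getElementsByTagName("name");
            List<String> names = new ArrayList<>();
            for (int i = 0; i < nodes.getLength(); i++) {
                names.add(nodes.item(i).getTextContent());
            }
            return names;
        } catch (Exception e) {
            // Wrap parser and I/O checked exceptions in an unchecked one.
            throw new IllegalArgumentException("could not parse response", e);
        }
    }

    public static void main(String[] args) {
        // Shortened copy of the response returned by the curl call.
        String sample = "<?xml version=\"1.0\" encoding=\"UTF-8\" standalone=\"yes\"?>"
                + "<collection><person><name>Penny</name></person>"
                + "<person><name>Leonard</name></person></collection>";
        System.out.println(parse(sample)); // prints [Penny, Leonard]
    }
}
```

If the XML comes from an untrusted source, the factory should also be hardened against external entities before use; that is left out here to keep the sketch short.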

Docker Maven Plugin Configuration

Let's take a closer look at the sample application.

Its pom.xml is updated to include docker-maven-plugin:

```xml
<plugin>
    <groupId>org.jolokia</groupId>
    <artifactId>docker-maven-plugin</artifactId>
    <version>0.11.5</version>
    <configuration>
        <images>
            <image>
                <alias>user</alias>
                <name>arungupta/javaee7-docker-maven</name>
                <build>
                    <from>arungupta/wildfly:8.2</from>
                    <assembly>
                        <descriptor>assembly.xml</descriptor>
                        <basedir>/</basedir>
                    </assembly>
                    <ports>
                        <port>8080</port>
                    </ports>
                </build>
                <run>
                    <ports>
                        <port>8080:8080</port>
                    </ports>
                </run>
            </image>
        </images>
    </configuration>
    <executions>
        <execution>
            <id>docker:build</id>
            <phase>package</phase>
            <goals>
                <goal>build</goal>
            </goals>
        </execution>
        <execution>
            <id>docker:start</id>
            <phase>install</phase>
            <goals>
                <goal>start</goal>
            </goals>
        </execution>
    </executions>
</plugin>
```

Each image configuration has three parts:

- Image name and alias

- `<build>`, which defines how the image is created. The base image, the build artifacts and their dependencies, the ports to be exposed, and so on are specified here. The assembly descriptor format is used to specify the artifacts to be included, and the descriptor lives in the `src/main/docker` directory. In our case, `assembly.xml` looks like:

  ```xml
  <assembly . . .>
      <id>javaee7-docker-maven</id>
      <dependencySets>
          <dependencySet>
              <includes>
                  <include>org.javaee7.sample:javaee7-docker-maven</include>
              </includes>
              <outputDirectory>/opt/jboss/wildfly/standalone/deployments/</outputDirectory>
              <outputFileNameMapping>javaee7-docker-maven.war</outputFileNameMapping>
          </dependencySet>
      </dependencySets>
  </assembly>
  ```

- `<run>`, which defines how the container is run. Port mappings are specified here: `8080:8080` maps port 8080 on the Docker host to port 8080 in the container.
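The `<build>` and `<run>` sections together determine the URL used in the TL;DR: the assembly copies the WAR into WildFly's deployments directory as `javaee7-docker-maven.war`, WildFly by default uses the WAR file name without `.war` as the context root, and the `<run>` mapping publishes container port 8080 on host port 8080. The sketch below composes that URL. It is illustrative only: the class `AppUrl` and its methods are hypothetical, it only handles the two-part `hostPort:containerPort` form used here, and `resources/persons` is simply taken from the curl URL.

```java
// Hypothetical helper that derives the application URL from the plugin settings.
public class AppUrl {

    // Host port from a two-part "hostPort:containerPort" mapping, such as the
    // <port>8080:8080</port> entry in <run>. Other mapping forms are not handled.
    public static int hostPort(String mapping) {
        String[] parts = mapping.split(":");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected hostPort:containerPort, got " + mapping);
        }
        return Integer.parseInt(parts[0].trim());
    }

    // Default WildFly context root: the WAR file name minus ".war"
    // (assuming no jboss-web.xml overrides it).
    public static String contextRoot(String warFileName) {
        if (!warFileName.endsWith(".war")) {
            throw new IllegalArgumentException("not a WAR: " + warFileName);
        }
        return warFileName.substring(0, warFileName.length() - ".war".length());
    }

    public static String url(String dockerHostIp, String portMapping,
                             String warFileName, String resourcePath) {
        return "http://" + dockerHostIp + ":" + hostPort(portMapping)
                + "/" + contextRoot(warFileName) + "/" + resourcePath;
    }

    public static void main(String[] args) {
        // Docker host IP as returned by "docker-machine ip mydocker" in this article.
        System.out.println(url("192.168.99.100", "8080:8080",
                "javaee7-docker-maven.war", "resources/persons"));
        // prints http://192.168.99.100:8080/javaee7-docker-maven/resources/persons
    }
}
```

Changing `outputFileNameMapping` or the host side of the `<run>` port mapping would change the URL accordingly.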

In addition, the package phase is bound to the docker:build goal and the install phase is bound to the docker:start goal.
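Because Maven runs every lifecycle phase up to and including the one requested, these bindings explain why `mvn install -Pdocker` in the TL;DR both builds the image and starts the container, while `mvn package -Pdocker` only builds it (assuming the `docker` profile is what activates the plugin). The sketch below models that with a simplified subset of the default lifecycle; `LifecycleSketch` is a hypothetical illustration, not Maven's own code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical model of how Maven phases trigger the bound docker goals.
public class LifecycleSketch {

    // Simplified subset of Maven's default lifecycle, in execution order.
    // The real lifecycle has more phases (process-resources, integration-test, ...).
    static final List<String> PHASES = List.of(
            "validate", "compile", "test", "package", "verify", "install", "deploy");

    // Phase-to-goal bindings from the <executions> section of the pom.xml.
    static final Map<String, String> BINDINGS = Map.of(
            "package", "docker:build",
            "install", "docker:start");

    // "mvn <phase>" runs every phase up to and including <phase>,
    // so collect the goals bound to any of those phases, in order.
    public static List<String> dockerGoalsFor(String requestedPhase) {
        int last = PHASES.indexOf(requestedPhase);
        if (last < 0) {
            throw new IllegalArgumentException("unknown phase: " + requestedPhase);
        }
        List<String> goals = new ArrayList<>();
        for (String phase : PHASES.subList(0, last + 1)) {
            String goal = BINDINGS.get(phase);
            if (goal != null) {
                goals.add(goal);
            }
        }
        return goals;
    }

    public static void main(String[] args) {
        System.out.println(dockerGoalsFor("package")); // prints [docker:build]
        System.out.println(dockerGoalsFor("install")); // prints [docker:build, docker:start]
    }
}
```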

There are four different docker-maven-plugins, and the shootout compares them in detail so you can pick the one that best serves your purpose.

How are you creating your Docker images from existing applications?

Enjoy!