Metadata, such as labels, can be attached to the Docker daemon. A label is a key/value pair that allows a Docker host to be targeted when placing containers. The semantics of a label are defined entirely by the application. When a service is created, a constraint can be specified so that its tasks run only on hosts with a particular label.

Let’s see how we can use labels and constraints in Docker for a real-world application.

Couchbase Multidimensional Scaling (MDS) allows the Index, Data, Query and Full-text Search services to be split across multiple nodes. Each service has different needs. For example, Query is CPU-heavy, Index is disk-intensive, and Data needs a mix of memory and fast reads/writes, such as SSDs.

MDS allows the hardware resources to be independently assigned and optimized on a per node basis, as application requirements change.

Read more about Multidimensional Scaling.

Let’s see how this can be easily accomplished in a three-node cluster using Docker swarm mode.

Start Ubuntu Instances

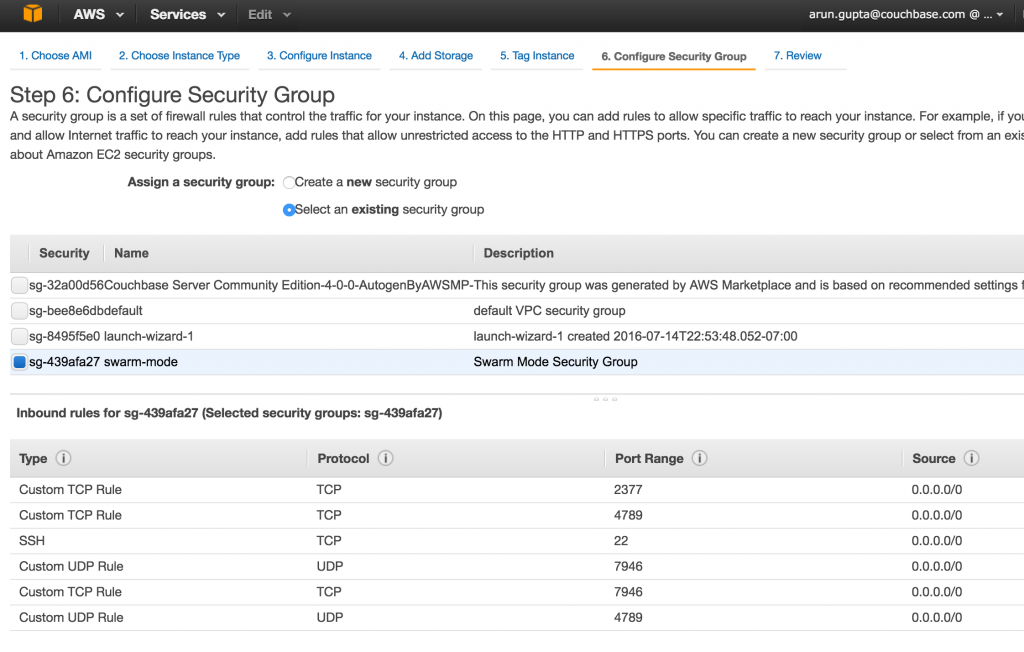

Start three Ubuntu Server 14.04 LTS (HVM) instances on EC2 (AMI ID: ami-06116566). Accept the defaults everywhere except for the security group. Swarm mode requires the following ports to be open between hosts:

- TCP port 2377 for cluster management communications

- TCP and UDP port 7946 for communication among nodes

- TCP and UDP port 4789 for overlay network traffic

Make sure to create a new security group with these rules.
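As an alternative to the console, the same rules can be sketched with the AWS CLI. This is only an illustration: it assumes the security group already exists, uses a placeholder group ID (`sg-0123456789abcdef0`), and allows the swarm ports only from instances in that same group. The hypothetical `swarm_sg_rules` helper prints the `aws ec2 authorize-security-group-ingress` calls instead of running them, so they can be reviewed first. SSH (TCP port 22) also has to be reachable for the scripts in this post.

```shell
#!/usr/bin/env bash
# Sketch: open the swarm mode ports between members of one security group.
# SG_ID is a placeholder; set it to the ID of the group you created.
SG_ID="${SG_ID:-sg-0123456789abcdef0}"

# Print one AWS CLI call per protocol/port pair listed above.
# Pipe the output to `sh` to actually apply the rules.
swarm_sg_rules() {
  local sg="$1" rule proto port
  for rule in tcp:2377 tcp:7946 udp:7946 tcp:4789 udp:4789; do
    proto="${rule%%:*}"   # text before the colon, e.g. tcp
    port="${rule##*:}"    # text after the colon, e.g. 2377
    echo "aws ec2 authorize-security-group-ingress --group-id $sg" \
         "--protocol $proto --port $port --source-group $sg"
  done
}

swarm_sg_rules "$SG_ID"
```

Using the group itself as the source keeps the ports closed to the rest of the internet while letting the three instances talk to each other.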

Wait a few minutes for the instances to be provisioned.

Set up Docker on Ubuntu

Swarm mode was introduced in Docker 1.12. At the time of this writing, 1.12 RC4 is the latest release candidate. Use the following script to install the RC4 release with experimental features:

```shell
# Public DNS names of all running instances (requires the AWS CLI and jq)
publicIp=$(aws ec2 describe-instances --filters Name=instance-state-name,Values=running | jq -r '.Reservations[].Instances[].PublicDnsName')

for node in $publicIp
do
  # Install the latest experimental Docker build
  ssh -o StrictHostKeyChecking=no -i ~/.ssh/aruncouchbase.pem ubuntu@$node 'curl -fsSL https://experimental.docker.com/ | sh'
  # Let the ubuntu user run docker without sudo
  ssh -i ~/.ssh/aruncouchbase.pem ubuntu@$node 'sudo usermod -aG docker ubuntu'
  # A new ssh session picks up the group change
  ssh -i ~/.ssh/aruncouchbase.pem ubuntu@$node 'docker version'
done
```

This script assumes that the AWS CLI and jq are already set up, and performs the following configuration for all running instances in your configured EC2 account:

- Get the public DNS name of each instance
- For each instance:
  - Install the latest Docker release with experimental features
  - Add the `ubuntu` user to the `docker` group, so that Docker can be used as a non-root user
  - Print the Docker version

This simple script sets up a Docker host on all three instances.
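If jq is not available, the AWS CLI's own `--query` option can produce the same list of host names. The following is a sketch of the setup loop written that way. The helper names (`running_public_dns`, `run_on`, `setup_docker`) and the `DRY_RUN` switch are hypothetical additions for this example; with `DRY_RUN=1`, the default here, it only prints the ssh commands.

```shell
#!/usr/bin/env bash
# Sketch: the Docker setup loop, using the AWS CLI's --query option
# instead of jq. DRY_RUN=1 (the default) only prints the ssh commands;
# set DRY_RUN=0 before calling setup_docker to run them.
KEY="${KEY:-$HOME/.ssh/aruncouchbase.pem}"
DRY_RUN="${DRY_RUN:-1}"

# Public DNS names of all running instances, one per line.
running_public_dns() {
  aws ec2 describe-instances \
    --filters Name=instance-state-name,Values=running \
    --query 'Reservations[].Instances[].PublicDnsName' \
    --output text | tr '\t' '\n'
}

# run_on <host> <remote command>
run_on() {
  if [ "$DRY_RUN" = 1 ]; then
    printf 'ssh -i %s ubuntu@%s %s\n' "$KEY" "$1" "$2"
  else
    ssh -o StrictHostKeyChecking=no -i "$KEY" "ubuntu@$1" "$2"
  fi
}

setup_docker() {
  local node
  for node in $(running_public_dns); do
    run_on "$node" 'curl -fsSL https://experimental.docker.com/ | sh'
    run_on "$node" 'sudo usermod -aG docker ubuntu'
    run_on "$node" 'docker version'   # new session, so the group change applies
  done
}
```

Printing first makes it easy to check which hosts will be touched before anything is installed.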

Assign Labels to Docker Daemon

Labels can be defined using DOCKER_OPTS. On Ubuntu, this variable is set in the /etc/default/docker file.

Each node needs a distinct label. For example, on the first node use the key couchbase.mds with the value index:

```shell
# Replace the commented-out DOCKER_OPTS line with one that sets the label
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-1> \
  "sudo sed -i '/#DOCKER_OPTS/c\\DOCKER_OPTS=\"--label=couchbase.mds=index\"' /etc/default/docker"
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-1> 'sudo restart docker'
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-1> 'docker info'
```

The Docker daemon must then be restarted to pick up the new option. Finally, docker info displays system-wide information:

```
Containers: 0
 Running: 0
 Paused: 0
 Stopped: 0
Images: 0
Server Version: 1.12.0-rc4
Storage Driver: aufs
 Root Dir: /var/lib/docker/aufs
 Backing Filesystem: extfs
 Dirs: 0
 Dirperm1 Supported: false
Logging Driver: json-file
Cgroup Driver: cgroupfs
Plugins:
 Volume: local
 Network: null host bridge overlay
Swarm: inactive
Runtimes: runc
Default Runtime: runc
Security Options: apparmor
Kernel Version: 3.13.0-74-generic
Operating System: Ubuntu 14.04.3 LTS
OSType: linux
Architecture: x86_64
CPUs: 1
Total Memory: 992.5 MiB
Name: ip-172-31-14-15
ID: KISZ:RSMD:4YOZ:2FKL:GJTN:EVGC:U3GH:CHC3:XUJN:4UJ2:H3QF:GZFH
Docker Root Dir: /var/lib/docker
Debug Mode (client): false
Debug Mode (server): false
Registry: https://index.docker.io/v1/
Labels:
 couchbase.mds=index
Experimental: true
Insecure Registries:
 127.0.0.0/8
WARNING: No swap limit support
```

As you can see, labels are visible in this information.
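To read the label from a script instead of by eye, the Labels section can be filtered out of the docker info output. This is a sketch that assumes each label is printed as a key=value line directly beneath `Labels:`, as in the output above; `daemon_labels` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print the daemon labels found in `docker info` output on stdin.
# Assumes each label appears as a key=value line right after "Labels:".
daemon_labels() {
  awk '
    /^Labels:/ { in_labels = 1; next }     # start of the Labels section
    in_labels && /^[ \t]*[^ \t:=]+=/ {     # a key=value line
      sub(/^[ \t]+/, "")                   # drop the indentation
      print
      next
    }
    { in_labels = 0 }                      # any other line ends the section
  '
}

# Usage (stderr dropped to hide the swap-limit warning):
#   ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-1> 'docker info' 2>/dev/null | daemon_labels
```

For the first node this should print couchbase.mds=index.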

For the second node, assign a different label:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-2> \
  "sudo sed -i '/#DOCKER_OPTS/c\\DOCKER_OPTS=\"--label=couchbase.mds=data\"' /etc/default/docker"
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-2> 'sudo restart docker'
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-2> 'docker info'
```

Make sure to use the address of the second EC2 instance. The Labels section of the updated docker info output will be:

```
Labels:
 couchbase.mds=data
```

Finally, label the last node:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-3> \
  "sudo sed -i '/#DOCKER_OPTS/c\\DOCKER_OPTS=\"--label=couchbase.mds=query\"' /etc/default/docker"
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-3> 'sudo restart docker'
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-3> 'docker info'
```

The updated information about the Docker daemon for this host will show:

```
Labels:
 couchbase.mds=query
```
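Since the three labeling steps above differ only in the host and the label value, the remote command can be generated by a small helper. This is a sketch: `label_cmd` is a hypothetical name, the `sleep 3` is an assumed grace period for the daemon to come back after the Upstart restart, and the sed expression only matches a commented-out `#DOCKER_OPTS` line, so it is intended to be run once per node.

```shell
#!/usr/bin/env bash
# Sketch: build the remote command that labels one node.
# label_cmd is a hypothetical helper, not part of Docker.
label_cmd() {
  local value="$1"
  # Replace the commented-out DOCKER_OPTS line with one that sets the label.
  local edit="sudo sed -i '/#DOCKER_OPTS/c\\DOCKER_OPTS=\"--label=couchbase.mds=${value}\"' /etc/default/docker"
  # Restart the daemon (Upstart on Ubuntu 14.04), wait briefly, show info.
  printf '%s\n' "$edit && sudo restart docker && sleep 3 && docker info"
}

# Print the command for each of the three labels used in this post.
for value in index data query; do
  label_cmd "$value"
done

# Usage:
#   ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-2> "$(label_cmd data)"
```

Keeping the sed expression in single quotes on the remote side is what lets the double quotes around the DOCKER_OPTS value survive the trip through ssh.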

In our case, the cluster is homogeneous: the machines are exactly alike, including their operating system, CPU, disk and memory capacity. In the real world, you’ll typically have the same operating system, but instance capacity, such as disk, CPU and memory, will differ based on which Couchbase services you want to run on each machine. The labels make the most sense in that case, but they still illustrate the point here.

Enable Swarm Mode and Create Cluster

Let’s enable swarm mode and create a cluster of one manager and two worker nodes. By default, manager nodes also act as worker nodes.

Initialize Swarm on the first node:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-1> 'docker swarm init --secret mySecret --listen-addr <private-ip>:2377'
```

This will show the output:

```
Swarm initialized: current node (ezrf5ap238kpmyq5h0lf55hxi) is now a manager.

To add a worker to this swarm, run the following command:
    docker swarm join --secret mySecret \
    --ca-hash sha256:ebda297c36a9d4c772f9e7867c453da42f69fe37cdfb1ba087f073051593a683 \
    ip-172-31-14-15.us-west-1.compute.internal:2377
```
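Instead of copying the join command by hand, it can be extracted from the docker swarm init output shown above. This is a sketch assuming the RC4 format, where the command starts with `docker swarm join` and continues over lines ending in a backslash; `join_command` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read `docker swarm init` output on stdin and print the
# "docker swarm join ..." command as a single line.
join_command() {
  awk '
    /docker swarm join/ { grab = 1 }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)                # strip leading indentation
      cont = sub(/[ \t]*\\$/, "", line)       # 1 if the line ended with a backslash
      cmd = (cmd == "") ? line : cmd " " line
      if (!cont) { print cmd; exit }          # last line of the command
    }
  '
}

# Usage:
#   join="$(ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-1> \
#     'docker swarm init --secret mySecret --listen-addr <private-ip>:2377' | join_command)"
#   ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-2> "$join"
```

This keeps the --ca-hash value intact, which is easy to mistype when copying it manually.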

Add the other two nodes as workers:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-2> 'docker swarm join --secret mySecret <master-internal-ip>:2377'
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<public-ip-3> 'docker swarm join --secret mySecret <master-internal-ip>:2377'
```

The exact commands in this case are:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-54-153-101-215.us-west-1.compute.amazonaws.com 'docker swarm init --secret mySecret --listen-addr ip-172-31-14-15.us-west-1.compute.internal:2377'
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-52-53-223-255.us-west-1.compute.amazonaws.com 'docker swarm join --secret mySecret ip-172-31-14-15.us-west-1.compute.internal:2377'
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-52-53-251-64.us-west-1.compute.amazonaws.com 'docker swarm join --secret mySecret ip-172-31-14-15.us-west-1.compute.internal:2377'
```

Complete details about the cluster can now be obtained:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-54-153-101-215.us-west-1.compute.amazonaws.com 'docker info'
```

And this shows the output:

```
Containers: 0
 Running: 0
 Paused: 0
 Stopped: 0
Images: 0
Server Version: 1.12.0-rc4
Storage Driver: aufs
 Root Dir: /var/lib/docker/aufs
 Backing Filesystem: extfs
 Dirs: 0
 Dirperm1 Supported: false
Logging Driver: json-file
Cgroup Driver: cgroupfs
Plugins:
 Volume: local
 Network: bridge null host overlay
Swarm: active
 NodeID: ezrf5ap238kpmyq5h0lf55hxi
 IsManager: Yes
 Managers: 1
 Nodes: 3
 CACertHash: sha256:ebda297c36a9d4c772f9e7867c453da42f69fe37cdfb1ba087f073051593a683
Runtimes: runc
Default Runtime: runc
Security Options: apparmor
Kernel Version: 3.13.0-74-generic
Operating System: Ubuntu 14.04.3 LTS
OSType: linux
Architecture: x86_64
CPUs: 1
Total Memory: 992.5 MiB
Name: ip-172-31-14-15
ID: KISZ:RSMD:4YOZ:2FKL:GJTN:EVGC:U3GH:CHC3:XUJN:4UJ2:H3QF:GZFH
Docker Root Dir: /var/lib/docker
Debug Mode (client): false
Debug Mode (server): false
Registry: https://index.docker.io/v1/
Labels:
 couchbase.mds=index
Experimental: true
Insecure Registries:
 127.0.0.1/8
WARNING: No swap limit support
```

This shows that we’ve created a 3-node cluster with one manager.
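A script that builds this cluster may want to confirm the node count before moving on. This is a sketch assuming the Swarm section of docker info looks like the output above; `swarm_field` and `check_swarm` are hypothetical helpers, and the expected counts (3 nodes, 1 manager) default to this post's cluster.

```shell
#!/usr/bin/env bash
# Sketch: pick single values such as "Nodes" or "Managers" out of
# `docker info` output read from stdin.
swarm_field() {
  awk -v key="$1:" '$1 == key { print $2; exit }'
}

# Succeed only if the captured `docker info` text reports the expected
# number of nodes and managers (defaults: 3 and 1).
check_swarm() {
  local info="$1" want_nodes="${2:-3}" want_managers="${3:-1}"
  local nodes managers
  nodes="$(printf '%s\n' "$info" | swarm_field Nodes)"
  managers="$(printf '%s\n' "$info" | swarm_field Managers)"
  [ "$nodes" = "$want_nodes" ] && [ "$managers" = "$want_managers" ]
}

# Usage:
#   info="$(ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> 'docker info' 2>/dev/null)"
#   check_swarm "$info" && echo "3 nodes, 1 manager"
```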

Run Docker Service with Constraints

Now, we are going to run three Couchbase services with different constraints. Each service specifies its constraint in the format `--constraint engine.labels.<key>==<value>`, where `<key>` and `<value>` match the label defined earlier on the target node.

Each service is given a unique name so that it can be scaled individually. All commands are sent to the swarm manager:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> 'docker service create --name=cb-mds-index --constraint engine.labels.couchbase.mds==index couchbase'
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> 'docker service create --name=cb-mds-data --constraint engine.labels.couchbase.mds==data couchbase'
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> 'docker service create --name=cb-mds-query --constraint engine.labels.couchbase.mds==query couchbase'
```

The exact commands in our case are:

```
> ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-54-153-101-215.us-west-1.compute.amazonaws.com 'docker service create --name=cb-mds-index --constraint engine.labels.couchbase.mds==index couchbase'
34lcko519mvr32hxw2m8dwp5c
> ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-54-153-101-215.us-west-1.compute.amazonaws.com 'docker service create --name=cb-mds-data --constraint engine.labels.couchbase.mds==data couchbase'
0drcucii08tnx5sm9prug30m1
> ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-54-153-101-215.us-west-1.compute.amazonaws.com 'docker service create --name=cb-mds-query --constraint engine.labels.couchbase.mds==query couchbase'
```
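The three commands follow a single pattern: the service name and the constraint value both come from the node label. A sketch of a hypothetical `service_cmd` helper that generates them makes the `engine.labels.<key>==<value>` format explicit; it only prints the commands, and the label key is the one set in DOCKER_OPTS earlier.

```shell
#!/usr/bin/env bash
# Sketch: print the `docker service create` command for one MDS role.
# LABEL_KEY matches the key set in DOCKER_OPTS on each node.
LABEL_KEY="couchbase.mds"

service_cmd() {
  local role="$1"   # index, data or query
  printf 'docker service create --name=cb-mds-%s --constraint engine.labels.%s==%s couchbase\n' \
    "$role" "$LABEL_KEY" "$role"
}

for role in index data query; do
  service_cmd "$role"
done

# Usage:
#   ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> "$(service_cmd index)"
```

Deriving the name from the same value as the constraint keeps each service tied to exactly one label, which is what allows them to be scaled independently.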

The list of services can be verified as:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> 'docker service ls'
```

This shows the output as:

```
ID            NAME          REPLICAS  IMAGE      COMMAND
0drcucii08tn  cb-mds-data   1/1       couchbase
34lcko519mvr  cb-mds-index  1/1       couchbase
bxjqjm6mashw  cb-mds-query  1/1       couchbase
```
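The REPLICAS column reads running/desired, so a quick scripted check is that both numbers match on every row. This is a sketch assuming the column layout shown above and replicated (not global) services; `all_replicas_running` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: read `docker service ls` output on stdin and exit non-zero if any
# service has fewer running tasks than desired (REPLICAS is column 3).
all_replicas_running() {
  awk '
    NR > 1 && NF {                         # skip the header and blank lines
      split($3, r, "/")                    # "1/1" -> r[1] running, r[2] desired
      if (r[1] != r[2]) { print "not ready: " $2; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }
  '
}

# Usage:
#   ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> 'docker service ls' \
#     | all_replicas_running && echo "all services running"
```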

The list of tasks (essentially the containers within a service) for each service can then be verified with:

```shell
ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> 'docker service tasks <service-name>'
```

And the output in our case:

```
> ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-54-153-101-215.us-west-1.compute.amazonaws.com 'docker service tasks cb-mds-index'
ID                         NAME            SERVICE       IMAGE      LAST STATE             DESIRED STATE  NODE
58jxojx32nf66jwqwt7nyg3cf  cb-mds-index.1  cb-mds-index  couchbase  Running 6 minutes ago  Running        ip-172-31-14-15
> ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-54-153-101-215.us-west-1.compute.amazonaws.com 'docker service tasks cb-mds-data'
ID                         NAME            SERVICE       IMAGE      LAST STATE             DESIRED STATE  NODE
af9zpuh6956fcih0sr70hfban  cb-mds-data.1   cb-mds-data   couchbase  Running 6 minutes ago  Running        ip-172-31-14-14
> ssh -i ~/.ssh/aruncouchbase.pem ubuntu@ec2-54-153-101-215.us-west-1.compute.amazonaws.com 'docker service tasks cb-mds-query'
ID                         NAME            SERVICE       IMAGE      LAST STATE             DESIRED STATE  NODE
ceqaza4xk02ha7t1un60jxtem  cb-mds-query.1  cb-mds-query  couchbase  Running 6 minutes ago  Running        ip-172-31-14-13
```

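This shows that the services are distributed across different nodes. You can also check that each task is indeed scheduled on the node with the matching label. For example, cb-mds-index runs on ip-172-31-14-15, the node whose docker info output lists couchbase.mds=index.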
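That check can also be scripted. Take the NODE column from docker service tasks and compare it with the Name reported by docker info on the node that carries the matching label. This is a sketch assuming NODE is the last column, as in the output above; `expect_on` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: verify that every task of a service runs on the expected node.
# Reads `docker service tasks <service>` output on stdin; NODE is assumed
# to be the last column.
expect_on() {
  local service="$1" expected="$2" nodes node
  nodes="$(awk 'NR > 1 && NF { print $NF }')"   # NODE column, header skipped
  if [ -z "$nodes" ]; then
    echo "$service: no tasks found" >&2
    return 1
  fi
  for node in $nodes; do
    if [ "$node" != "$expected" ]; then
      echo "$service: task on $node, expected $expected" >&2
      return 1
    fi
  done
  echo "$service: all tasks on $expected"
}

# Usage:
#   ssh -i ~/.ssh/aruncouchbase.pem ubuntu@<master-public-ip> 'docker service tasks cb-mds-index' \
#     | expect_on cb-mds-index ip-172-31-14-15
```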

All Couchbase instances can be configured in a cluster to provide a complete database solution for your web, mobile and IoT applications.

Want to learn more?

- Docker Swarm Mode

- Couchbase on Containers

- Follow us on @couchbasedev or @couchbase

- Ask questions on Couchbase Forums

Source: blog.couchbase.com/2016/july/labels-constraints-docker-daemon-service