Does your organization want to simplify Database DevOps?

Is your database becoming a bottleneck to rapid innovation?

Do you want to save millions of $$ in database licensing costs?

Read on!

State of Database DevOps

State of Database DevOps is a survey on DevOps adoption rates among SQL Server professionals. Over 1000 SQL Server professionals responded to the survey. The respondents came from across the globe and represent a wide range of job roles, company sizes and industries.

The survey results contain several useful findings. A few are worth highlighting here:

The greatest challenge with integrating database changes into a DevOps process would still be synchronizing application and database changes.

Another one …

The greatest drawback identified with traditional siloed database development is the increased risk of failed deployments or downtime when introducing changes. This is closely followed by slow development and release cycles and the inability to respond quickly to changing business requirements

And another one …

Increasing the speed of delivery of database changes and freeing developers up to do more value added work are the key drivers for automating the delivery of database changes

The challenges highlighted here are not specific to SQL Server; they apply to any relational database. Whether you use Oracle, Postgres, MySQL, MariaDB or any other relational database, you are very likely facing the same issues. Why?

Why is Relational not well suited for Database DevOps?

It’s common for an application to operate on data from multiple tables in an RDBMS. For example, placing an order may use Customer, Order and Product tables. Each table has multiple columns with standard data types specific to a database. Tables may have primary key and foreign key (referential) constraints. Developers building applications on a relational database typically use an Object Relational Mapper (ORM); Java developers, for example, use Hibernate or another implementation of the Java Persistence API. Similar ORMs exist for other languages. ORMs capture the complex underlying database structure and allow programmers to build applications naturally in their own language.

ORMs also use a persistence provider, which allows your application to be independent of the underlying database. The persistence provider creates a binding between the language-specific class and the database structure. For example, it maps a class to one or more tables, binds the language data types to the types defined in the database and captures the relationships between tables. In theory, a programmer can switch the application to a different RDBMS by using a different persistence provider. In practice, it is rarely that simple!

Any database change requires the ORM classes to be updated; otherwise the application may not work. For example, adding a new table may mean adding a new Java class or updating an existing one. Changing the data type of a column requires the class definition to be updated, and any code that relies on the old type will no longer compile. Adding a new column means adding a new field in the class. Every such change means the classes must be updated and the application repackaged.
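The coupling described above can be sketched in a few lines. This is a deliberately simplified, hand-rolled mapping using Python's built-in `sqlite3` module, not a real ORM: the `Customer` class, the `customer` table and the `load_customers` helper are all hypothetical names chosen for illustration. A real ORM maps columns explicitly and may tolerate some changes, but the underlying point is the same: the class and the table have to move together.

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class Customer:
    # Hypothetical domain class; its fields mirror the table columns one-to-one.
    id: int
    name: str


def load_customers(conn):
    # Naive mapping: every column of every row becomes a constructor argument.
    rows = conn.execute("SELECT * FROM customer ORDER BY id").fetchall()
    return [Customer(*row) for row in rows]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO customer VALUES (1, 'Alice')")

# Class and table agree, so the mapping works.
before = load_customers(conn)

# The schema changes, but the class is not updated.
conn.execute("ALTER TABLE customer ADD COLUMN email TEXT")

try:
    load_customers(conn)
    mapping_broke = False
except TypeError:
    # The row now has three values, but Customer only accepts two.
    mapping_broke = True
```

Until `Customer` gains an `email` field and the application is redeployed, every read through this mapping fails.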

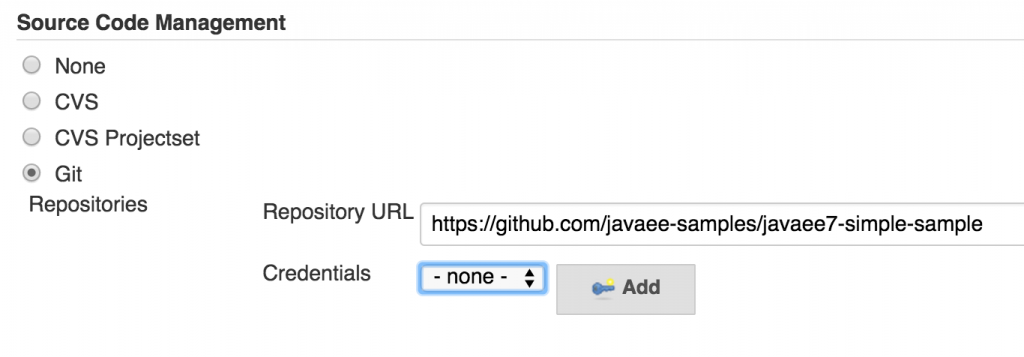

Changes in database structure are required all the time to meet the evolving needs of the business. But if the DBAs make a database change and the ORM classes are not updated, there is a disconnect. Application deployment needs to be coordinated with updates to the database schema. Tools such as Flyway and Liquibase integrate application and database deployment, but developers are often not allowed to make direct changes to the production database. A disconnect leaves your application broken, and the business suffers. DevOps practices can definitely help solve these issues, as they require close collaboration between the developers who build applications and the DBAs who update database scripts.
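To make the coordination problem concrete, here is a minimal sketch of the general idea of versioned schema migrations, written against Python's built-in `sqlite3` module. It is not how Flyway or Liquibase are implemented or configured; the `MIGRATIONS` list, the `schema_version` table and the `migrate` function are assumptions made up for this example.

```python
import sqlite3

# Hypothetical ordered migrations: (version number, SQL statement).
MIGRATIONS = [
    (1, "CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)"),
    (2, "ALTER TABLE customer ADD COLUMN email TEXT"),
]


def current_version(conn):
    # Track applied migrations in a bookkeeping table inside the database.
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER)")
    row = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return row[0] or 0


def migrate(conn):
    # Apply only the migrations newer than the recorded version, in order.
    applied = []
    version = current_version(conn)
    for number, statement in MIGRATIONS:
        if number > version:
            conn.execute(statement)
            conn.execute("INSERT INTO schema_version VALUES (?)", (number,))
            applied.append(number)
    conn.commit()
    return applied


conn = sqlite3.connect(":memory:")
first_run = migrate(conn)   # applies both migrations
second_run = migrate(conn)  # nothing left to apply
columns = [row[1] for row in conn.execute("PRAGMA table_info(customer)")]
```

Running `migrate` as part of every application deployment keeps the schema version and the application version in step, but each release still has to ship a matching migration and matching class changes.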

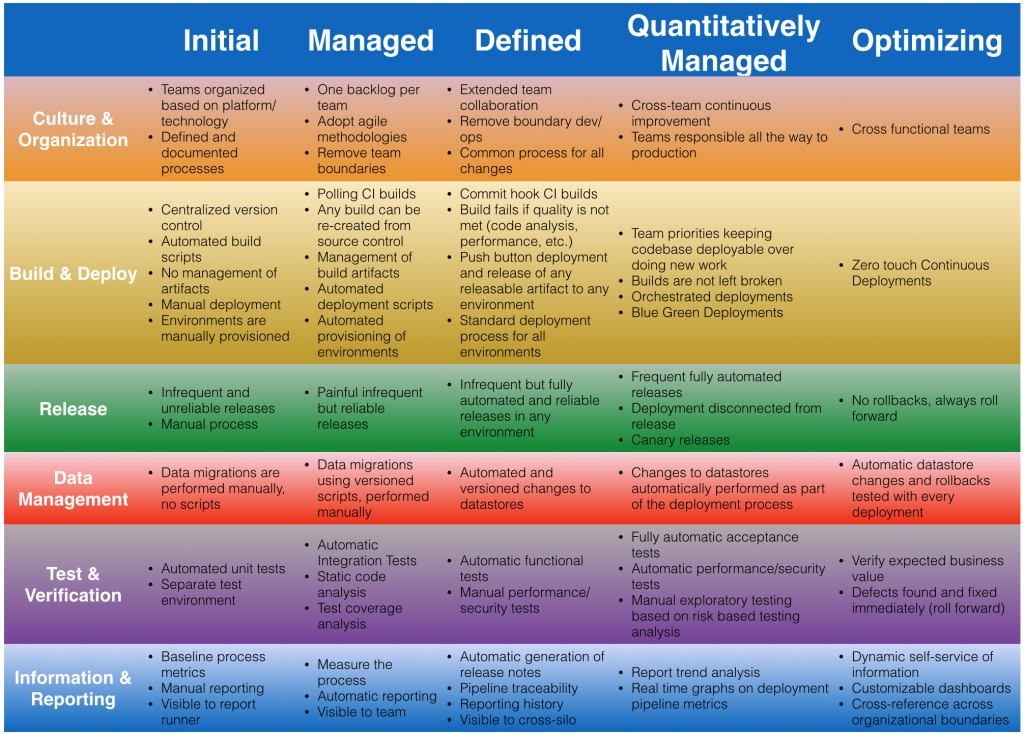

But as the survey reports, more than 50% of the respondents have not yet adopted DevOps.

There are challenges even if you were to integrate database changes into a DevOps process.

Synchronizing application and database changes, where the ORM classes must stay in sync with the backend database structure, is the biggest challenge. DBAs may want to structure the database in a way that is not optimal for application development. Maintaining consistency across application and database development is the next major challenge to seamless database DevOps.

Siloed development seriously limits your ability to innovate rapidly and deliver value to your business.

As shown in this image, failed deployments when introducing changes, slow development/release cycles and inability to respond to business needs account for over 60% of the drawbacks.

Speed of delivery of database changes is the biggest concern for database DevOps.

So what do you do?

How does NoSQL simplify Database DevOps?

A NoSQL document database helps to simplify database DevOps! Here is how:

- Schema flexibility – Developers need a single database that can store rapidly changing structured, semi-structured and unstructured data. A NoSQL document database offers schema flexibility by allowing developers to operate directly on JSON data and derive meaning from it.

- No impedance mismatch – With no ORM in the application, there is no impedance mismatch between domain classes and database structure. Only the application code needs to be updated, and no coordination with schema changes is required.

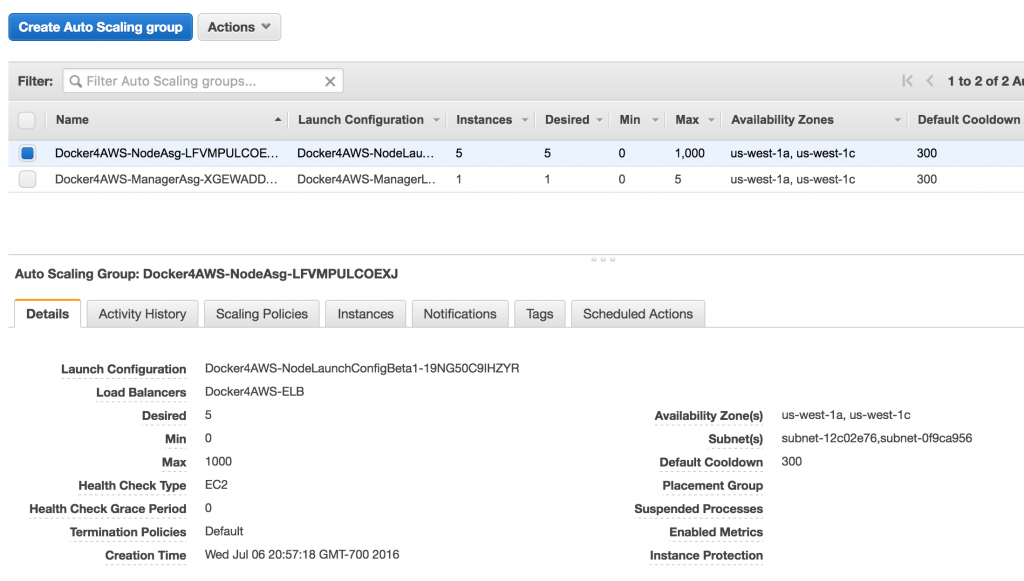

- Scalability – One of the drawbacks mentioned in the report is the inability to adapt to changing business requirements. This highlights scalability as a major DevOps challenge. If the volume of data, the number of queries, or the types of indexes required to support the application change, the database needs to change to accommodate them. Not in weeks or months, but today! NoSQL databases run on commodity hardware and have a scale-out architecture, as opposed to the scale-up approach of an RDBMS. Sharding can help with scalability in an RDBMS, but that is extra complexity that now needs to be dealt with.
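The schema flexibility point can be illustrated with a small sketch. The `store` dictionary below is only an in-memory stand-in for a document database, and the key format, field names and helper functions are hypothetical; the sketch uses Python's standard `json` module rather than any database SDK.

```python
import json

# In-memory stand-in for a document store: document key -> JSON text.
store = {}


def save(key, document):
    store[key] = json.dumps(document)


def load(key):
    return json.loads(store[key])


# An early version of the application writes a minimal customer document.
save("customer::1", {"name": "Alice"})

# A later version starts writing extra fields. No schema change is needed,
# and the older document stays exactly as it was.
save("customer::2", {"name": "Bob", "email": "bob@example.com", "tags": ["vip"]})


def contact_email(key):
    # The application code decides how to handle documents without the field.
    return load(key).get("email", "unknown")


emails = [contact_email("customer::1"), contact_email("customer::2")]
```

Documents of both shapes live side by side, and the only thing that had to change was application code.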

Learn more about why enterprises move to NoSQL.

Which NoSQL database is preferred by GE, Marriott, Verizon, United, LinkedIn, DIRECTV and many others?

What are some other advantages of Couchbase?

- Homogeneous distributed architecture – no master/slave

- SQL-like query language to query JSON documents

- Auto-sharding using vBuckets

- Memory-first architecture reduces the need for an additional caching layer

- Cross-data center replication

- Multiple SDKs

- Database on server or mobile device, with complete synchronization between them

- Support for different container orchestration frameworks
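As a rough illustration of what automatic sharding means in general, the sketch below hashes each document key to a fixed number of logical partitions and then looks up which node owns that partition. It is a simplified model of hash-based partitioning, not Couchbase's actual vBucket mapping: the partition count, the CRC32-modulo rule, the node names and the round-robin assignment are all assumptions made for this example.

```python
import zlib

NUM_PARTITIONS = 1024  # assumed number of logical partitions
NODES = ["node-a", "node-b", "node-c"]  # hypothetical cluster nodes

# Partition map: each logical partition is owned by exactly one node.
# Rebalancing a cluster would mean editing this map, not rehashing keys.
partition_map = {p: NODES[p % len(NODES)] for p in range(NUM_PARTITIONS)}


def partition_for(key):
    # Hash the document key to a stable partition number.
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


def node_for(key):
    # Two-step lookup: key -> partition -> owning node.
    return partition_map[partition_for(key)]


keys = ["customer::1", "customer::2", "order::42"]
placements = {key: node_for(key) for key in keys}
```

Because the key-to-partition step never changes, the application does not need to know how many nodes exist; only the partition map is updated when nodes are added or removed.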

NoSQL is not a panacea by any means. If you are building a system that needs complex transaction logic or real-time data warehousing, then an RDBMS may be a better fit. However, NoSQL addresses your scalability and agility concerns and simplifies database DevOps.

Here is a great video on migrating from relational database to NoSQL:

Here is another interesting video that shows why Marriott transitioned from Relational to NoSQL:

Many more videos are available from Couchbase Connect 2016.

And some more relevant blogs:

- Making the Shift from Relational to NoSQL: How to Get Started (whitepaper)

- Moving from Oracle database to Couchbase

- Moving from MySQL to Couchbase

- Moving from SQL Server to Couchbase – Part 1 (data modeling), Part 2 (data migration), Part 3 (coming)

- Moving from MongoDB to Couchbase

- Migrate your MongoDB with Mongoose REST API to Couchbase with Ottoman

- Migrating from Relational Database to Couchbase

- Top 5 reasons companies replace Oracle with Couchbase

Talk to us:

- Couchbase Developer Portal

- Couchbase Forums

- @couchbasedev and @couchbase

Source: blog.couchbase.com/nosql-simplifies-database-devops/

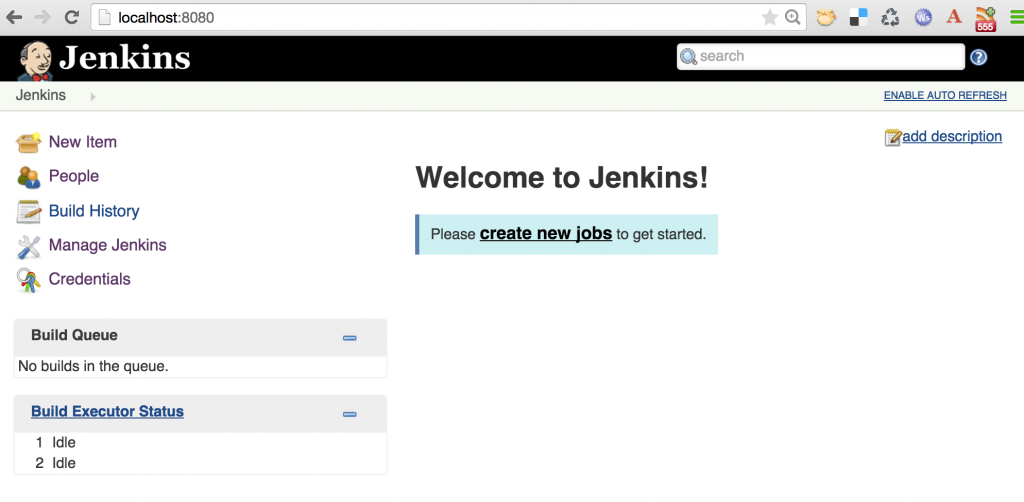

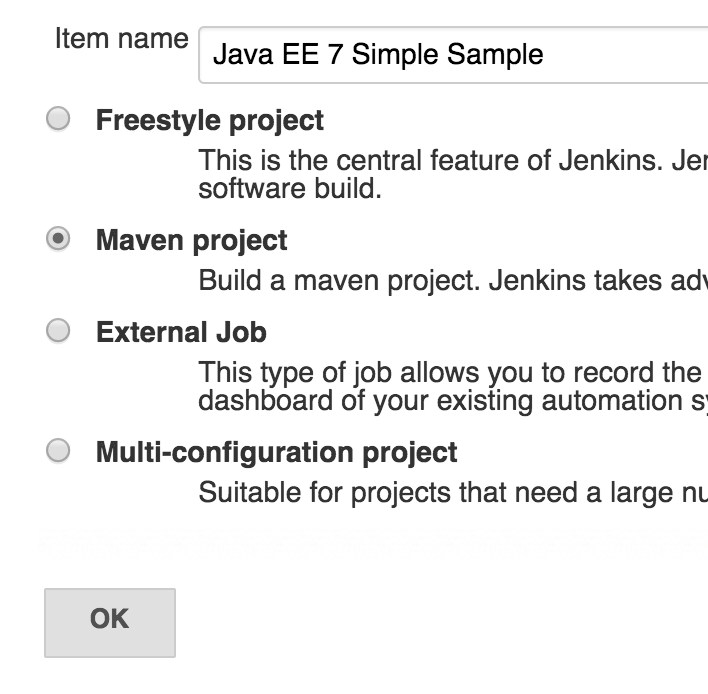
