A key feature of Kubernetes is its ability to maintain the "desired state" using declared primitives. Replication Controllers are a key concept that helps achieve this state.

A replication controller ensures that a specified number of pod “replicas” are running at any one time. If there are too many, it will kill some. If there are too few, it will start more.
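That "too many, kill some; too few, start more" behavior is a reconciliation loop: compare the observed Pods with the desired count and act on the difference. Below is a minimal Python sketch of one such pass, assuming Pods are identified only by their names. `reconcile` is a hypothetical helper, not Kubernetes source code, and which surplus Pods get killed is an arbitrary choice here.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str]) -> Tuple[int, List[str]]:
    """One pass of a hypothetical replication-controller loop.

    Returns how many Pods to create and which Pod names to delete so that
    the number of running Pods converges to `desired`.
    """
    if desired < 0:
        raise ValueError("desired replica count must be non-negative")
    diff = desired - len(running)
    if diff > 0:
        # Too few Pods: start the missing ones.
        return diff, []
    if diff < 0:
        # Too many Pods: kill the surplus (arbitrarily, the last ones listed).
        return 0, running[diff:]
    # Already at the desired state: nothing to do.
    return 0, []


if __name__ == "__main__":
    print(reconcile(2, ["wildfly-rc-d5fbs"]))  # (1, [])
    print(reconcile(2, ["a", "b", "c"]))       # (0, ['c'])
```

A real controller repeats such a pass continuously, which is why a deleted Pod is replaced without any manual action.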

Let's take a look at how to spin up a Replication Controller with two replicas of a Pod. Then we'll kill one Pod and see how Kubernetes automatically starts another one.

Start Kubernetes Cluster

- The easiest way to start a Kubernetes cluster on Mac OS is using Vagrant:

```
export KUBERNETES_PROVIDER=vagrant
curl -sS https://get.k8s.io | bash
```

- Alternatively, Kubernetes can be downloaded from github.com/GoogleCloudPlatform/kubernetes/releases/download/v1.0.0/kubernetes.tar.gz, and the cluster can be started as:

```
cd kubernetes
export KUBERNETES_PROVIDER=vagrant
cluster/kube-up.sh
```

Start and Verify Replication Controller and Pods

- All configuration files required by Kubernetes to start Replication Controller are in kubernetes-java-sample project. Clone the workspace:

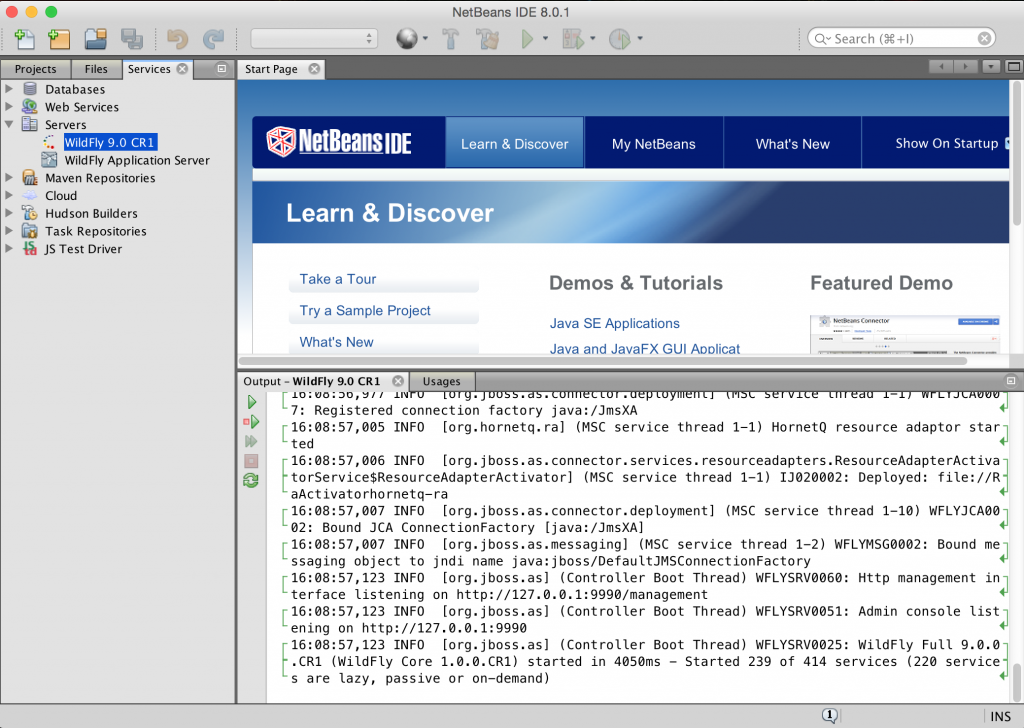

123git clone https://github.com/arun-gupta/kubernetes-java-sample.git - Start a Replication Controller that has two replicas of a pod, each with a WildFly container:

```
./cluster/kubectl.sh create -f ~/workspaces/kubernetes-java-sample/wildfly-rc.yaml
replicationcontrollers/wildfly-rc
```

The configuration file used is shown:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: wildfly-rc
  labels:
    name: wildfly
spec:
  replicas: 2
  template:
    metadata:
      labels:
        name: wildfly
    spec:
      containers:
      - name: wildfly-rc-pod
        image: jboss/wildfly
        ports:
        - containerPort: 8080
```

The default WildFly Docker image is used here.

- Get the status of the Pods:

```
./cluster/kubectl.sh get -w po
NAME               READY     STATUS    RESTARTS   AGE
wildfly-rc-15xg5   0/1       Pending   0          2s
wildfly-rc-d5fbs   0/1       Pending   0          2s
NAME               READY     STATUS    RESTARTS   AGE
wildfly-rc-15xg5   0/1       Pending   0          5s
wildfly-rc-d5fbs   0/1       Pending   0          5s
wildfly-rc-d5fbs   0/1       Running   0          8s
wildfly-rc-15xg5   0/1       Running   0          8s
wildfly-rc-d5fbs   1/1       Running   0          15s
wildfly-rc-15xg5   1/1       Running   0          15s
```

Notice that -w refreshes the status whenever there is a change. The STATUS changes from Pending to Running, and READY then goes from 0/1 to 1/1 once the container is ready to receive requests.

- Get the status of the Replication Controller:

```
./cluster/kubectl.sh get rc
CONTROLLER   CONTAINER(S)     IMAGE(S)        SELECTOR       REPLICAS
wildfly-rc   wildfly-rc-pod   jboss/wildfly   name=wildfly   2
```

If multiple Replication Controllers are running, you can query for this specific one using the label:

```
./cluster/kubectl.sh get rc -l name=wildfly
CONTROLLER   CONTAINER(S)     IMAGE(S)        SELECTOR       REPLICAS
wildfly-rc   wildfly-rc-pod   jboss/wildfly   name=wildfly   2
```

- Get the names of the running Pods:

```
./cluster/kubectl.sh get po
NAME               READY     STATUS    RESTARTS   AGE
wildfly-rc-15xg5   1/1       Running   0          36m
wildfly-rc-d5fbs   1/1       Running   0          36m
```

- Find the IP address of each Pod (using its name):

```
./cluster/kubectl.sh get -o template po wildfly-rc-15xg5 --template={{.status.podIP}}
10.246.1.18
```

And of the other Pod as well:

```
./cluster/kubectl.sh get -o template po wildfly-rc-d5fbs --template={{.status.podIP}}
10.246.1.19
```

- A Pod's IP address is accessible only inside the cluster. Log in to the minion to access WildFly's main page hosted by the containers:

```
kubernetes> vagrant ssh minion-1
Last login: Tue Jul 14 21:35:12 2015 from 10.0.2.2
[vagrant@kubernetes-minion-1 ~]$ curl http://10.246.1.18:8080
<!--
~ JBoss, Home of Professional Open Source.
. . .
</div>
</body>
</html>
[vagrant@kubernetes-minion-1 ~]$ curl http://10.246.1.19:8080
<!--
~ JBoss, Home of Professional Open Source.
~ Copyright (c) 2014, Red Hat, Inc., and individual contributors
. . .
</div>
</body>
</html>
```
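Both the Replication Controller and the `-l name=wildfly` query pick Pods by label. wildfly-rc.yaml sets no `spec.selector`; for a v1 ReplicationController the selector then defaults to the Pod template's labels, which is why `get rc` reports `SELECTOR name=wildfly`. The Python sketch below models only equality-based matching (`key=value`) on plain dictionaries; the helper names and the sample Pods are hypothetical, not kubectl internals.

```python
from typing import Dict, List, Optional


def effective_selector(rc: dict) -> Dict[str, str]:
    """Return spec.selector, falling back to the Pod template's labels."""
    spec = rc["spec"]
    selector: Optional[Dict[str, str]] = spec.get("selector")
    if not selector:
        selector = spec["template"]["metadata"]["labels"]
    return dict(selector)


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """Equality-based match: every key=value in the selector must be present."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    """Names of the Pods whose labels satisfy the selector."""
    return [p["name"] for p in pods if matches(selector, p["labels"])]


# Mirrors the relevant fields of wildfly-rc.yaml (no explicit selector).
rc = {
    "metadata": {"name": "wildfly-rc", "labels": {"name": "wildfly"}},
    "spec": {
        "replicas": 2,
        "template": {"metadata": {"labels": {"name": "wildfly"}}},
    },
}

# Hypothetical Pods; only the first two carry the name=wildfly label.
pods = [
    {"name": "wildfly-rc-15xg5", "labels": {"name": "wildfly"}},
    {"name": "wildfly-rc-d5fbs", "labels": {"name": "wildfly"}},
    {"name": "other-pod", "labels": {"name": "mysql"}},
]

selector = effective_selector(rc)
print(selector)                # {'name': 'wildfly'}
print(select(selector, pods))  # ['wildfly-rc-15xg5', 'wildfly-rc-d5fbs']
```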

Automatic Restart of Pods

Let's delete a Pod and see how a new Pod is automatically created.

```
kubernetes> ./cluster/kubectl.sh delete po wildfly-rc-15xg5
pods/wildfly-rc-15xg5
kubernetes> ./cluster/kubectl.sh get -w po
NAME               READY     STATUS    RESTARTS   AGE
wildfly-rc-0xoms   0/1       Pending   0          2s
wildfly-rc-d5fbs   1/1       Running   0          48m
NAME               READY     STATUS    RESTARTS   AGE
wildfly-rc-0xoms   0/1       Pending   0          11s
wildfly-rc-0xoms   0/1       Running   0          13s
wildfly-rc-0xoms   1/1       Running   0          21s
```

Notice how the Pod with name wildfly-rc-15xg5 was deleted and a new Pod with the name wildfly-rc-0xoms was created.
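The replacement gets a different name because, as the output suggests, Pods created by the controller are named after it plus a random suffix. The Python sketch below replays the delete-and-replace cycle on a set of names. The five-character lowercase alphanumeric suffix is an assumption inferred from names like wildfly-rc-0xoms, and `make_pod_name`/`top_up` are hypothetical helpers, not Kubernetes code.

```python
import random
import string
from typing import Set

# Assumed suffix alphabet and length, inferred from names like wildfly-rc-0xoms.
SUFFIX_CHARS = string.ascii_lowercase + string.digits
SUFFIX_LEN = 5


def make_pod_name(controller: str, existing: Set[str], rng: random.Random) -> str:
    """Hypothetical helper: controller name + '-' + random suffix, avoiding clashes."""
    while True:
        suffix = "".join(rng.choice(SUFFIX_CHARS) for _ in range(SUFFIX_LEN))
        name = f"{controller}-{suffix}"
        if name not in existing:
            return name


def top_up(controller: str, desired: int, pods: Set[str], rng: random.Random) -> Set[str]:
    """Create new Pod names until the set holds `desired` Pods."""
    pods = set(pods)
    while len(pods) < desired:
        pods.add(make_pod_name(controller, pods, rng))
    return pods


rng = random.Random(42)  # seeded so the run is repeatable
pods = {"wildfly-rc-15xg5", "wildfly-rc-d5fbs"}
pods.discard("wildfly-rc-15xg5")  # like: kubectl.sh delete po wildfly-rc-15xg5
pods = top_up("wildfly-rc", 2, pods, rng)
print(sorted(pods))  # two Pods again; the new one has a fresh random suffix
```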

Finally, delete the Replication Controller:

```
./cluster/kubectl.sh delete -f ~/workspaces/kubernetes-java-sample/wildfly-rc.yaml
```

The latest configuration files and detailed instructions are in the kubernetes-java-sample project.

In the real world, you'll typically wrap this Replication Controller in a Service and front it with a Load Balancer. But that's a topic for another blog!

Enjoy!
